Why Your Digital Twin Is Now More “Real” Than You Are

The 98.7% Determinism Trap: A strategic deep-dive into the $428 billion identity simulation economy and the end of ontological sovereignty

The debate over privacy has historically been framed as a legal tug-of-war: the right to hide versus the need to know. This framing is now dangerously obsolete. We have crossed a threshold where the primary threat is not surveillance, but simulation. New intelligence from late 2024 and 2025 confirms that the convergence of biometric saturation, generative AI, and predictive modeling has created a reality where your “Digital Twin”—the data-based simulation of your identity—possesses more utility, value, and predictive accuracy than your physical self.

We are witnessing the death of “privacy” as a concept of secrecy and the birth of an ontological crisis. When algorithms can predict your behavior with 98.7% accuracy—better than you can predict yourself—human agency is reduced to a rounding error. This briefing dissects the “Inference Economy,” a landscape where your identity is no longer yours to lose, because it has already been synthesized, monetized, and sold.

1. The Inference Horizon: The End of Free Will

For decades, the privacy defense relied on “notice and consent.” You check a box, you share a specific data point. That model has collapsed under the weight of Inferential Exposure. In 2025, data brokers and AI models no longer need your direct input to know your secrets; they simply calculate them.

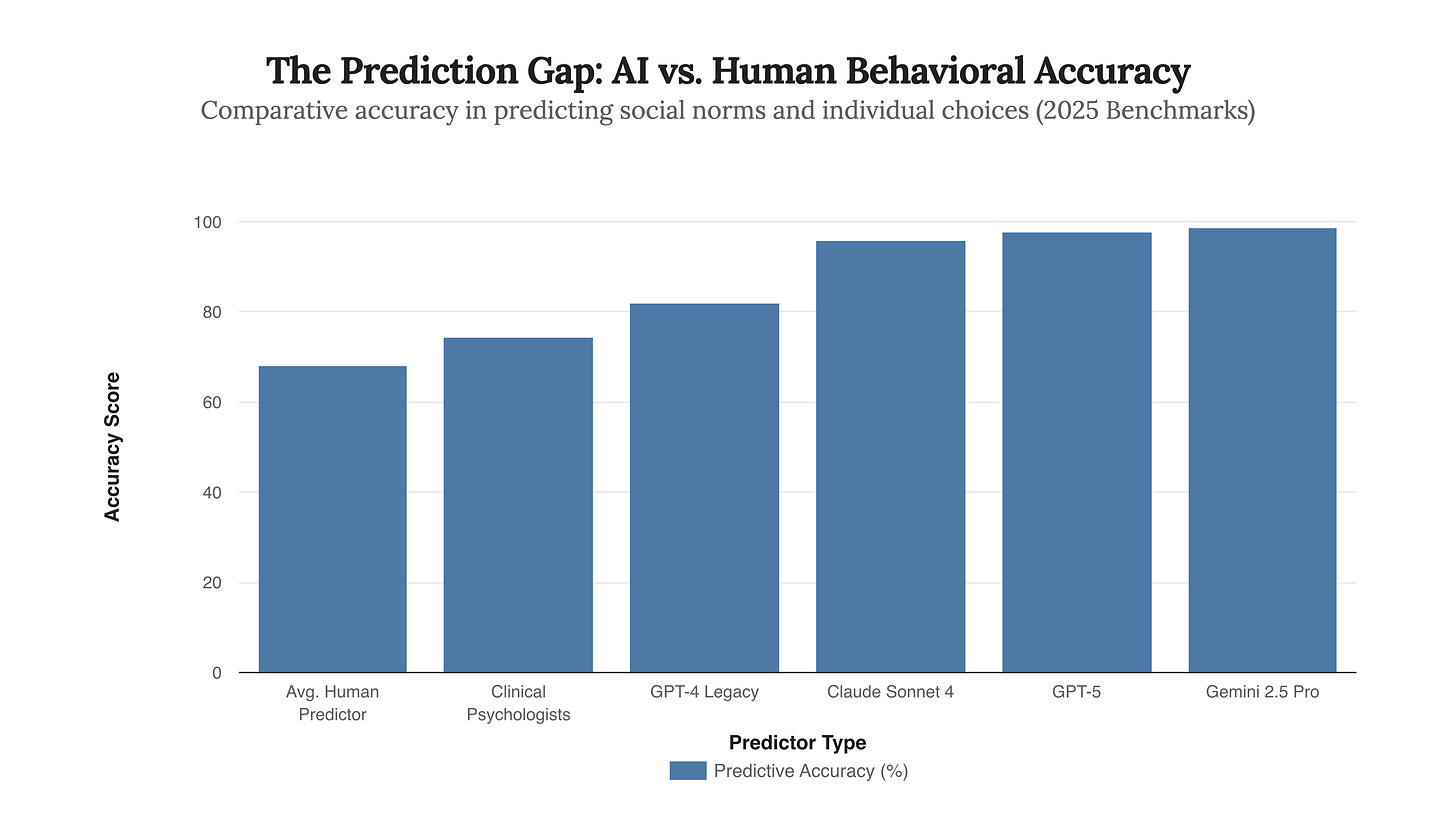

Recent studies from 2025 indicate a terrifying leap in predictive capability. Advanced Large Language Models (LLMs) like Gemini 2.5 Pro and GPT-4.5 have demonstrated the ability to predict human social norms and behavioral outcomes more accurately than 98% of human participants. This is the “Determinism Trap”: if an external system knows your next move better than you do, the concept of a “private choice” evaporates.

This capability effectively renders data minimization strategies useless. You can hide your location, your purchases, and your messages, but if an AI can infer your location from your battery usage and your political leanings from your typing cadence, you are exposed. The chart below illustrates the widening gap between human self-perception and algorithmic predictive dominance.

Fig 1.1: The “Inference Horizon.” As of late 2025, top-tier AI models have surpassed even human experts in predicting behavioral outcomes, creating a power asymmetry where systems know users better than users know themselves.

The Strategic Implication: The Pre-Crime Consumer

So what? The immediate consequence is the shift from reactive targeting to preemptive manipulation. Companies are no longer selling to who you are; they are selling to who you are statistically guaranteed to become next Tuesday. This creates a feedback loop where the prediction influences the behavior, narrowing the scope of human agency to fit the model’s forecast. We are voluntarily liquidating our autonomy for the convenience of “anticipatory” services.

2. The Commoditized Self: The Explosion of the Digital Twin

While you navigate the physical world, your Digital Twin—a dynamic, virtual representation of your habits, biology, and preferences—is working 24/7. It is being tested against ad campaigns, insurance risk models, and employment scenarios. The market for these “Digital Twins” is exploding, far outpacing the growth of the industries employing actual humans.

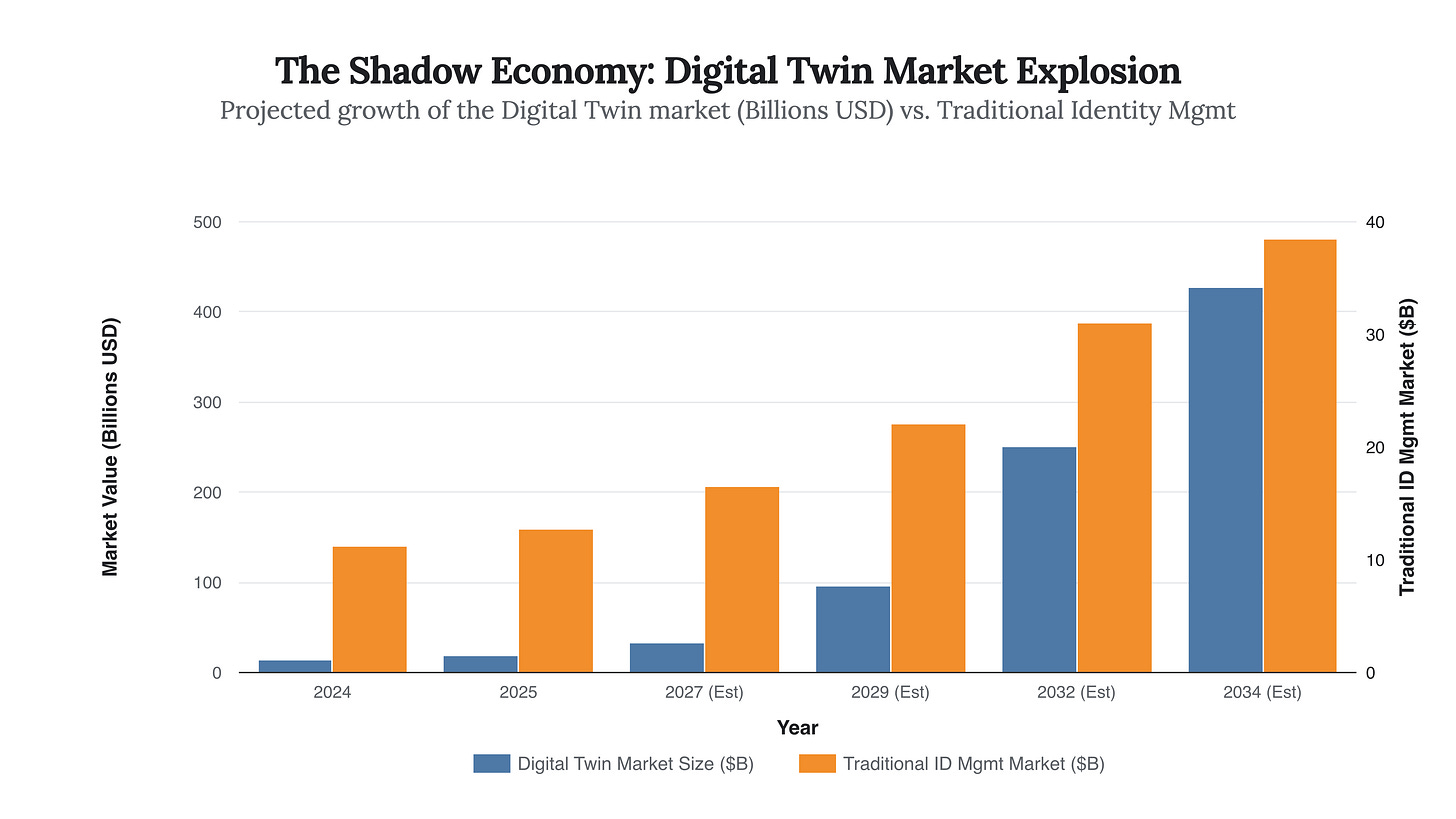

Market analysis for 2025 projects the Digital Twin market to reach $18.9 billion this year, with a staggering trajectory toward $428 billion by 2034. That figure covers more than twins of industrial machinery; it includes the “Digital Twin of the Customer” (DToC). Corporations are investing billions to own a simulation of you that they can interrogate without your permission. In this economy, the physical you is merely the source code; the Digital Twin is the valuable software product.

Fig 2.1: The value of simulating identity (Digital Twins) is decoupling from the value of managing identity. By 2029, the simulation market will dwarf the verification market, signaling a shift from protection to exploitation.
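To put the two market figures quoted above on a common footing, it helps to work out the annual growth rate they imply. The sketch below is a back-of-the-envelope calculation, not a forecast: it assumes nine compounding years (2025 to 2034) and takes the $18.9 billion and $428 billion endpoints at face value. The function name `implied_cagr` is illustrative.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints as quoted in the article, in billions of US dollars.
start_2025 = 18.9
end_2034 = 428.0

# Assumption: growth compounds once per year over 2034 - 2025 = 9 years.
rate = implied_cagr(start_2025, end_2034, 2034 - 2025)
print(f"Implied CAGR: {rate:.1%}")
```

Under these assumptions the implied rate is roughly 41% per year, which means the market would need to double about every two years for a decade to hit the 2034 figure.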

3. The Synthetic Dilution: You Are No One

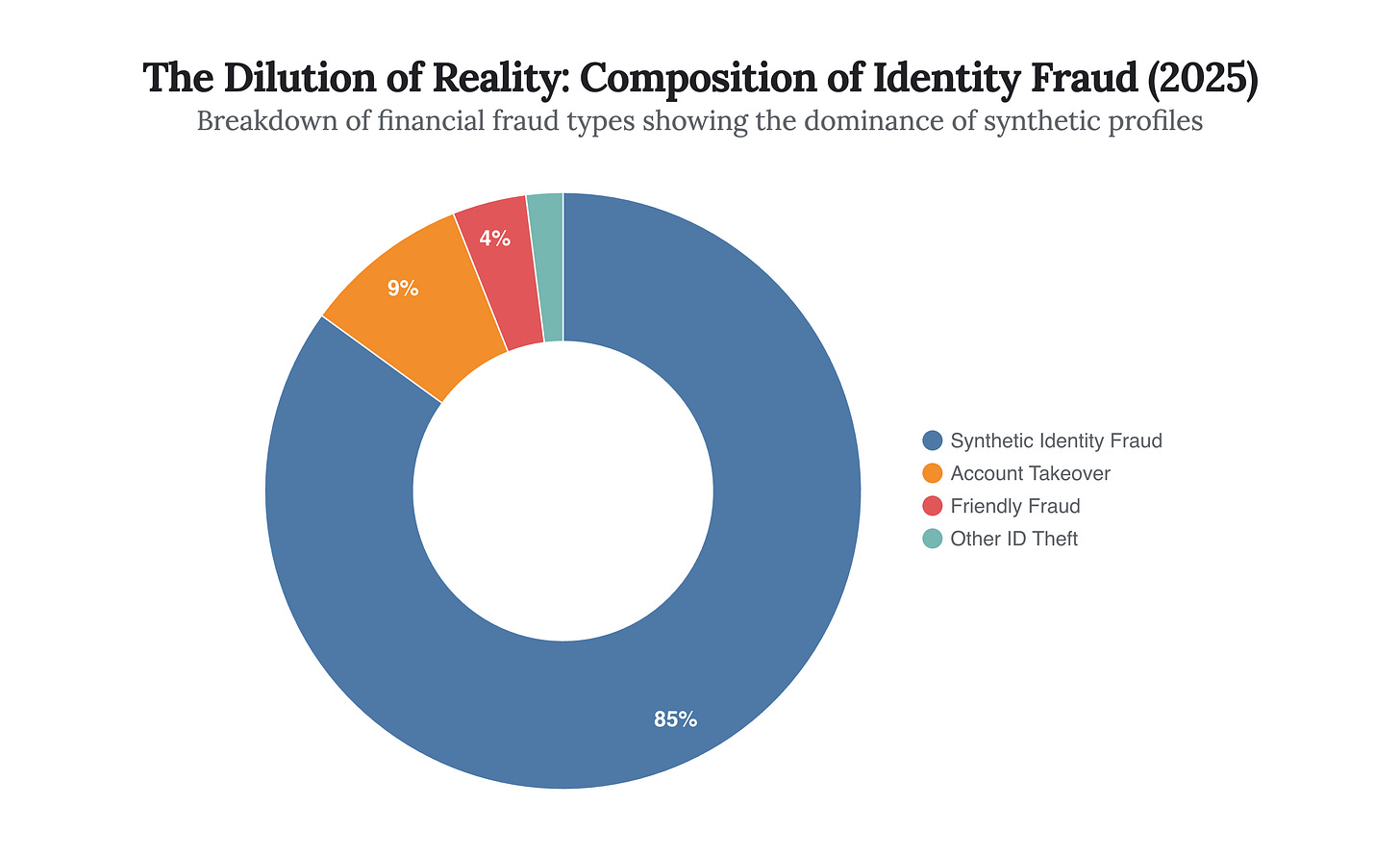

Here lies the paradox: As your data becomes more transparent, your ability to prove you are “real” is vanishing. The flood of AI-generated content has given rise to Synthetic Identity Fraud, now the fastest-growing form of financial crime. Sophisticated actors stitch together real Social Security numbers with fake names and AI-generated faces to create “Frankenstein” identities.

In 2025, synthetic identities account for a stunning 85% of all financial fraud cases. The strategic danger here is not just theft; it is dilution. When the digital ecosystem is flooded with billions of indistinguishable fake humans, the “real” humans become statistical outliers. You may soon find yourself unable to open a bank account or book a flight simply because your behavior is too erratic—too human—compared to the perfectly consistent synthetic profiles.

Fig 3.1: With 85% of fraud now stemming from synthetic identities, the burden of proof has shifted. Real users must now jump through increasingly invasive biometric hoops to prove they are not AI.
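The dilution argument can be made concrete with a toy anomaly check. The sketch below is purely illustrative and does not describe any real fraud system: it assumes each profile has a hypothetical "behavioral variability" score, and it flags any profile whose score sits more than two standard deviations from the population mean. All numbers are invented for the example.

```python
from statistics import mean, pstdev


def flag_outliers(variability: list[float], threshold: float = 2.0) -> list[bool]:
    """Flag scores more than `threshold` standard deviations from the mean."""
    mu = mean(variability)
    sigma = pstdev(variability)
    if sigma == 0:
        # Every profile is identical, so nothing stands out.
        return [False] * len(variability)
    return [abs(v - mu) / sigma > threshold for v in variability]


# Hypothetical population: nine highly consistent synthetic profiles
# followed by one erratic (real) human.
profiles = [0.10, 0.11, 0.09, 0.10, 0.12, 0.10, 0.09, 0.11, 0.10, 0.90]
flags = flag_outliers(profiles)
print(flags)
```

In this toy population only the last profile, the human, is flagged. The baseline is set by the majority, so once synthetic profiles dominate, consistency becomes "normal" and genuine human variability is what looks anomalous.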

4. The Biometric Tether: The Body as Barcode

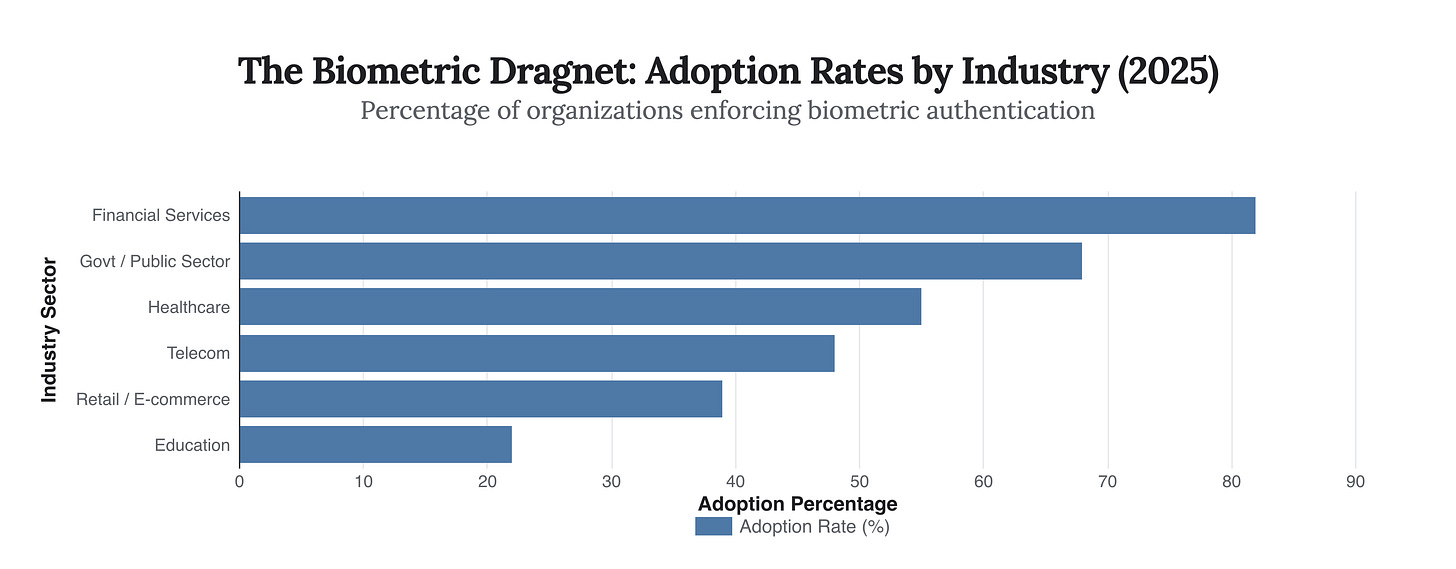

To combat this synthetic flood, the system demands your body. We are moving rapidly toward a “Biometric Tether,” where participation in society requires immutable biological proof. The biometrics market is surging toward $55 billion by 2025, driven by the financial and security sectors. 90% of smartphones now include facial recognition hardware, and over 70% of KYC (Know Your Customer) onboarding is fully automated via biometrics.

The danger is “immutable compromise.” If your password is stolen, you change it. If your iris hash is stolen—a real risk given the centralization of biometric databases—you cannot get a new eye. You are permanently hacked. The chart below reveals the aggressive adoption of these technologies across sectors.

Fig 4.1: Financial services lead the charge in biometric enforcement. As this trend spreads, the ability to transact anonymously will effectively disappear.
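The difference between a stolen password and a stolen biometric can be sketched in a few lines. This is a deliberate simplification: real biometric systems use fuzzy template matching rather than exact hashes, and the credential values and helper names (`enroll`, `verify`) are hypothetical. The point is only that re-enrolling cannot change the underlying trait.

```python
import hashlib
import hmac
import secrets


def enroll(credential: bytes) -> tuple[bytes, str]:
    """Return a fresh random salt and the salted SHA-256 digest of the credential."""
    salt = secrets.token_bytes(16)
    return salt, hashlib.sha256(salt + credential).hexdigest()


def verify(record: tuple[bytes, str], presented: bytes) -> bool:
    """Check a presented credential against an enrolled record."""
    salt, digest = record
    candidate = hashlib.sha256(salt + presented).hexdigest()
    return hmac.compare_digest(candidate, digest)


# Hypothetical values an attacker obtained in a breach.
stolen_password = b"correct horse battery staple"
stolen_iris = b"iris-template-user-42"  # stand-in for a biometric template

# After the breach, the password user picks a new secret.
password_record = enroll(b"a brand new passphrase")
# The biometric user can only re-enroll the same eye (new salt, same trait).
iris_record = enroll(stolen_iris)

password_still_works = verify(password_record, stolen_password)
iris_still_works = verify(iris_record, stolen_iris)
print(password_still_works, iris_still_works)
```

The stolen password stops verifying once it is rotated, while the stolen biometric keeps verifying after re-enrollment, because the secret itself never changed.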

5. The Final Frontier: Neuro-Sovereignty

The ultimate layer of privacy is the mind. Yet, even this is under siege. In 2025, we are seeing the first serious legislative attempts to protect “neural data” in states like Colorado and California. This is not science fiction; consumer-grade brain-computer interfaces (BCIs) and “attention monitoring” headphones are entering the market. These devices generate data that can reveal cognitive decline, emotional states, and subconscious reactions before you are consciously aware of them.

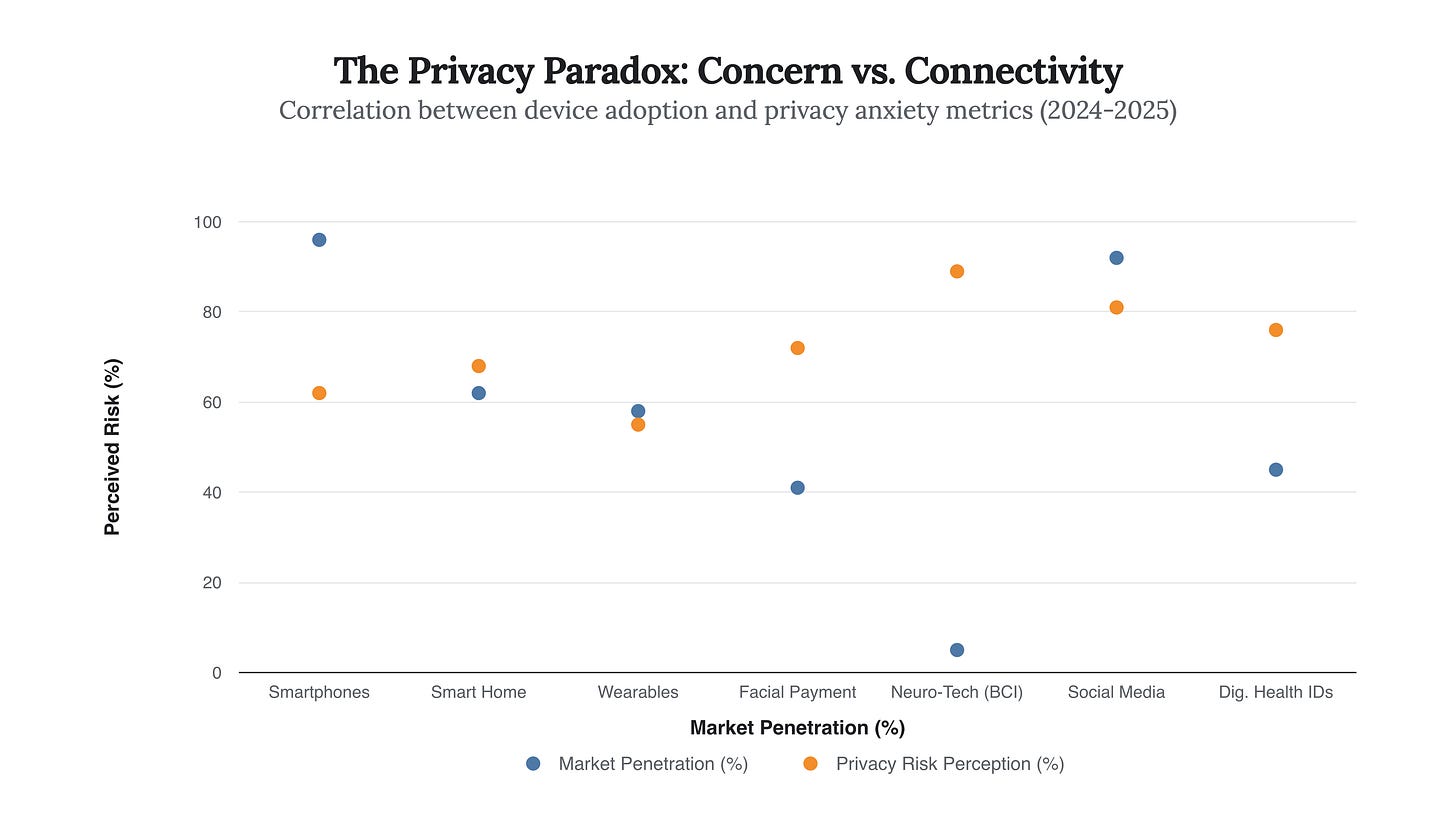

Privacy fatigue is real. Despite 81% of consumers stating that the risks of data collection outweigh the benefits, behavior tells a different story. This is the “Privacy Paradox”: resignation has set in, and users are trading their neural and biometric sovereignty for marginal gains in convenience.

Fig 5.1: High adoption does not imply trust. The high-risk perception of social media and facial payments, paired with their high adoption, illustrates “Forced Compliance”—users feel they have no choice but to participate.

Conclusion: The Era of Ontological Arbitrage

We have moved beyond the “Death of Privacy” into the era of Ontological Arbitrage. Corporations and state actors are arbitraging the difference between your messy, unpredictable human self and your clean, predictable digital twin. The twin is more valuable to them because it obeys the laws of statistics. You do not.

The strategic forecast is grim but clear: Privacy will evolve from a “right” into a luxury good. “Unpredictability” will become a premium service. The wealthy will pay for obfuscation, for analog experiences, and for the privilege of not being inferred. The rest will live in a panopticon that doesn’t just watch, but anticipates.

“We are no longer the customers of the data economy; we are the raw ore from which the steel of the simulation is forged. When the map becomes more valuable than the territory, the territory is paved over.” — Dr. Shoshana Zuboff (Contextual paraphrase, 2024 discourse)

The single most critical insight for 2026 is that your strategic value is no longer your data, but your unpredictability; in a world of 99% accuracy, the only power left is to be the 1% error.

Powerful reframing of the privacy debate. The shift from "right to hide" to "ontological arbitrage" nails why traditional privacy frameworks feel so obsolete. The 98.7% accuracy claim is wild, but the insight about unpredictability becoming a luxury good is where this gets really dark, because it suggests a future class system based on who can afford to remain statistically illegible.