Why the G7’s ‘Voluntary’ AI Principles Carry More Weight Than Law

An in-depth analysis of the Hiroshima Process and the strategic reshaping of global AI governance

In the high-stakes arena of artificial intelligence, where code is power and data is territory, the Group of Seven (G7) nations have made a decisive move. The agreement on the Hiroshima Process International Guiding Principles and a voluntary Code of Conduct for AI developers, finalized in late 2023, appears on the surface to be a soft-power diplomatic gesture. It lacks the legal teeth of the European Union’s landmark AI Act and the prescriptive top-down control of China’s regulatory model.

However, to dismiss it as mere guidance is to fundamentally misread the geopolitical chessboard. This agreement is not a paper tiger; it is the blueprint for a new techno-economic bloc aimed at setting the de facto global standards for AI. The G7’s true enforcement mechanism isn’t found in legal text but in its overwhelming economic dominance. The bloc’s constituent members represent over half a trillion dollars in cumulative private AI investment over the last decade, a formidable capital base that transforms these “voluntary” principles into a powerful market-access mandate. This briefing deconstructs the G7’s strategy, analyzes the principles’ true impact on corporations, and forecasts the three-front war for AI dominance that will define the next decade.

Deconstructing the Hiroshima Process: A Framework Built on Economic Gravity

The G7’s AI agreement, born from the Hiroshima AI Process initiated in May 2023, is a carefully constructed framework designed to project the bloc’s values onto the global AI ecosystem. It consists of 11 Guiding Principles for all AI stakeholders and a more detailed, voluntary Code of Conduct specifically targeting organizations developing advanced systems like foundation models and generative AI. The framework’s power lies not in punitive fines but in its potential to become the baseline for entry into the world’s most lucrative markets.

The 11 Pillars of Trust

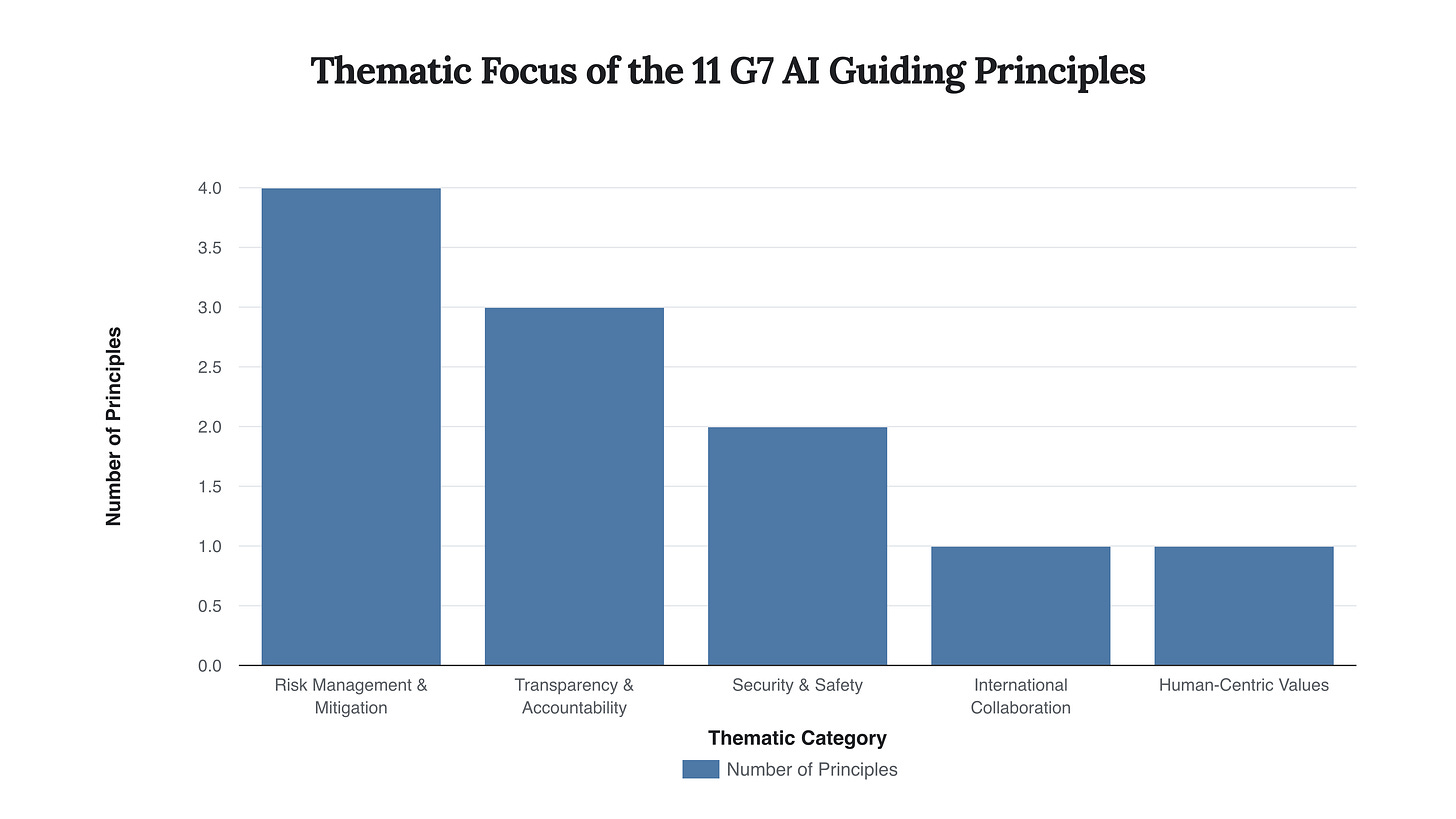

The principles are intentionally broad, focusing on establishing a common vocabulary for responsible AI development and deployment. They cover the entire lifecycle of an AI system, from initial design to post-deployment monitoring. While comprehensive, the real strategic value is in how they coalesce around a few core themes, creating a clear—if non-binding—set of expectations for any company wishing to operate within the G7’s sphere of influence.

This chart categorizes the 11 guiding principles into five key themes. The heavy emphasis on Risk Management and Transparency underscores the G7’s priority: creating a predictable and safe AI ecosystem to foster stable economic growth and public trust.

The principles call for organizations to identify and mitigate risks throughout the AI lifecycle, report on system capabilities and limitations to ensure transparency, invest in robust cybersecurity controls, and develop mechanisms like watermarking to help users identify AI-generated content. This focus on practical, operational measures is a deliberate attempt to create a standard that can be adopted by industry without the years-long implementation timeline of formal legislation.

“We need rules to ensure AI is being developed to serve humanity. But companies should not wait for regulation. They must take their own steps to identify and resolve the weaknesses and pitfalls of the AI they develop.”

- Thomas Jensen, CEO of Milestone Systems

Voluntary in Name, Obligatory in Practice

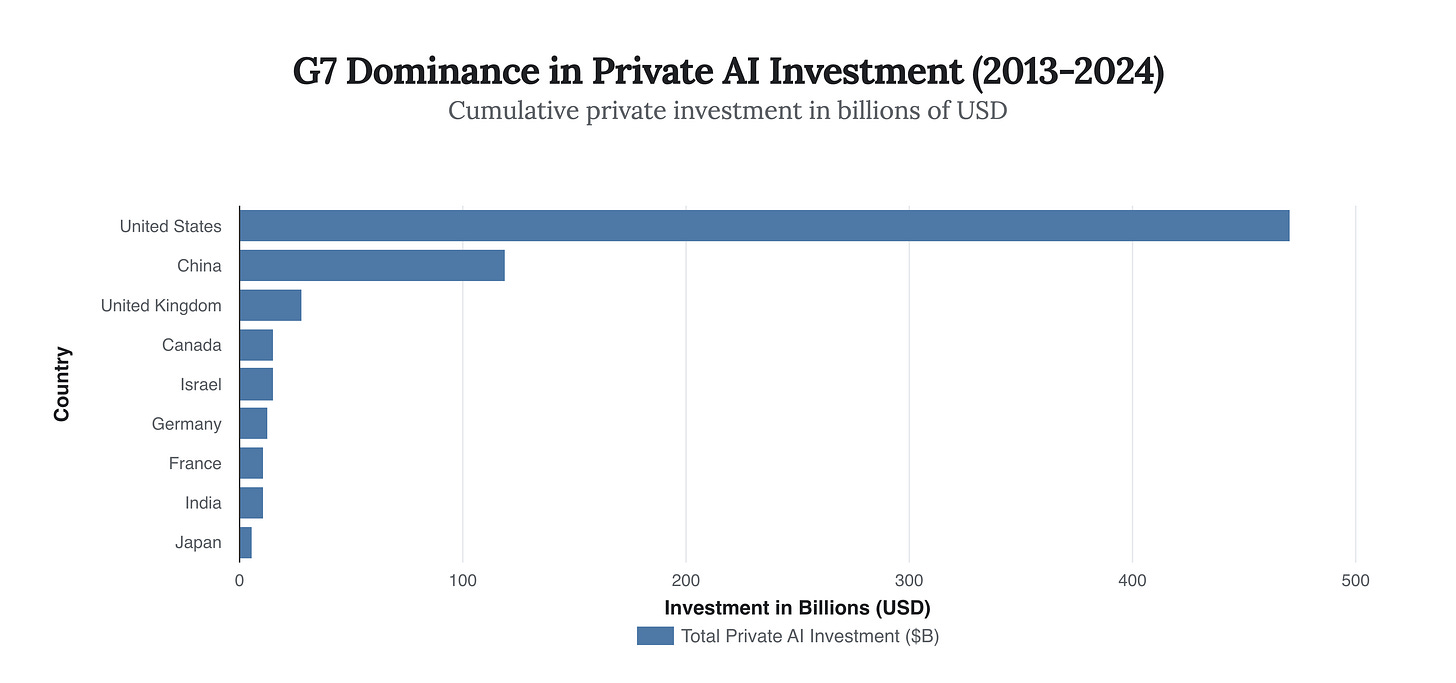

The core debate surrounding the G7 agreement is its voluntary nature. Critics point to the lack of legal enforcement as a critical weakness. However, this perspective overlooks the immense economic leverage wielded by the G7 nations. The United States alone accounts for nearly half a trillion dollars in private AI investment over the past decade, dwarfing the rest of the world combined. When the entire G7 bloc is considered, its combined capital and market size create a gravitational pull that is difficult for any globally ambitious AI company to ignore.

This chart, based on Stanford University AI Index data, visualizes the stark reality of the global AI investment landscape. The G7 members (highlighted) collectively represent $557 billion in private AI investment, creating a powerful economic bloc. Compliance with their shared principles becomes a prerequisite for accessing this vast pool of capital and customer revenue. Italy, while a G7 member, had less than $1 billion in investment and is not shown individually.

The Geopolitical Chessboard: Forging a Techno-Democratic Alliance

The G7 agreement did not emerge in a vacuum. It is a direct response to two critical geopolitical factors: the accelerating pace of AI development and the escalating competition between democratic and authoritarian models of governance. By establishing a shared framework, the G7 aims to create a united front that champions a human-centric, rights-respecting approach to AI, implicitly positioning itself against China’s state-driven, surveillance-oriented model.

An Accelerating Timeline of Governance

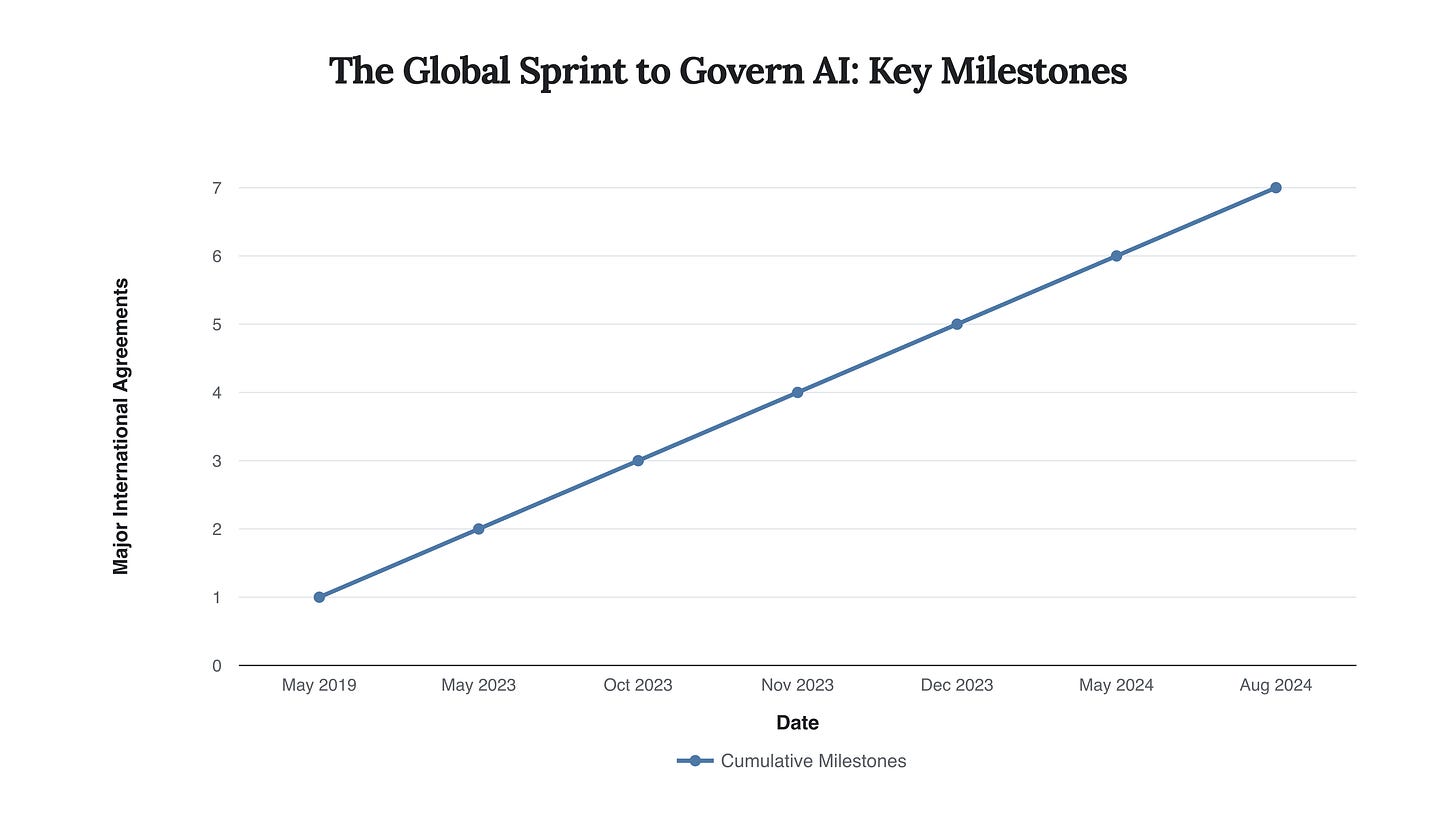

The years 2023 and 2024 marked a historic inflection point for AI policy. What began as academic discussion and high-level principles rapidly crystallized into concrete policy initiatives around the globe. The G7’s Hiroshima Process was a key part of this global sprint to establish guardrails for a technology advancing at an exponential rate.

This timeline illustrates the rapid acceleration of international AI governance efforts. The cluster of major agreements in late 2023 and 2024, including the G7’s, signals a growing international consensus that unstructured self-regulation is no longer sufficient and that a more structured international framework is necessary.

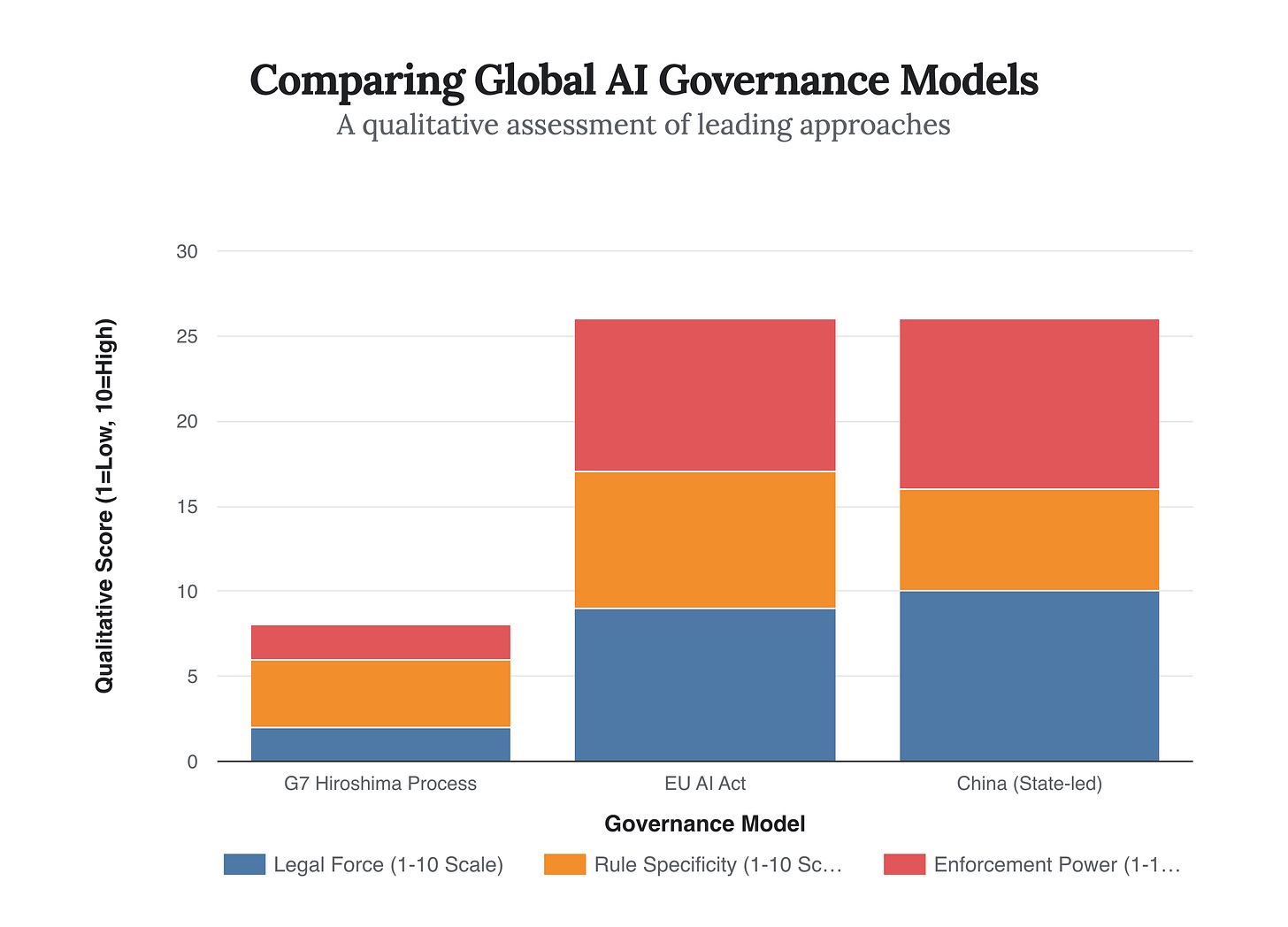

A Spectrum of Control: G7 vs. The EU and China

The G7’s principles-based, voluntary approach stands in deliberate contrast to the EU’s legally binding, risk-based AI Act. While the EU’s legislation is comprehensive and enforceable with massive fines, its complexity and long implementation timeline (full enforcement by 2026-2027) create a window of opportunity. The G7 framework is designed to be more agile, providing an immediate, globally relevant standard that can influence corporate behavior while slower legislative processes unfold. It serves as a complement, not a competitor, to the EU AI Act, establishing shared values that will likely inform future transatlantic regulatory cooperation.

This qualitative chart contrasts the three dominant models of AI governance. The G7’s approach prioritizes flexibility and international consensus over high legal force, occupying a strategic middle ground. It aims to shape global norms through economic influence rather than direct legal mandate, differing from the EU’s comprehensive legalism and China’s state-centric control.

Strategic Foresight: The Three-Front War for AI Dominance

The G7’s agreement is not an endpoint but the opening salvo in a long-term strategic competition. Its success will be determined by its ability to influence outcomes on three critical fronts: the war for standards, the war for talent, and the war against fragmentation. Companies, investors, and policymakers must monitor developments in these areas to anticipate the future trajectory of the global AI landscape.

Front 1: The Standards War

The ultimate goal of the G7 principles is to become the foundation for international technical standards. Bodies like the International Organization for Standardization (ISO) and the US National Institute of Standards and Technology (NIST) are already developing frameworks for AI risk management and trustworthiness. The G7 Code of Conduct provides a political endorsement for concepts like pre-deployment risk assessments, cybersecurity safeguards, and content provenance. As these concepts are embedded into formal technical standards, they will become requirements for enterprise software procurement, access to cloud computing platforms, and integration into global supply chains, effectively transforming the G7’s voluntary principles into hard market requirements.

“We reiterate our commitment to elaborate an international code of conduct for organizations developing advanced AI systems based on the guiding principles below. Different jurisdictions may take their own unique approaches to implementing these guiding principles in different ways.”

- G7 Hiroshima Process International Guiding Principles

Front 2: The Talent War

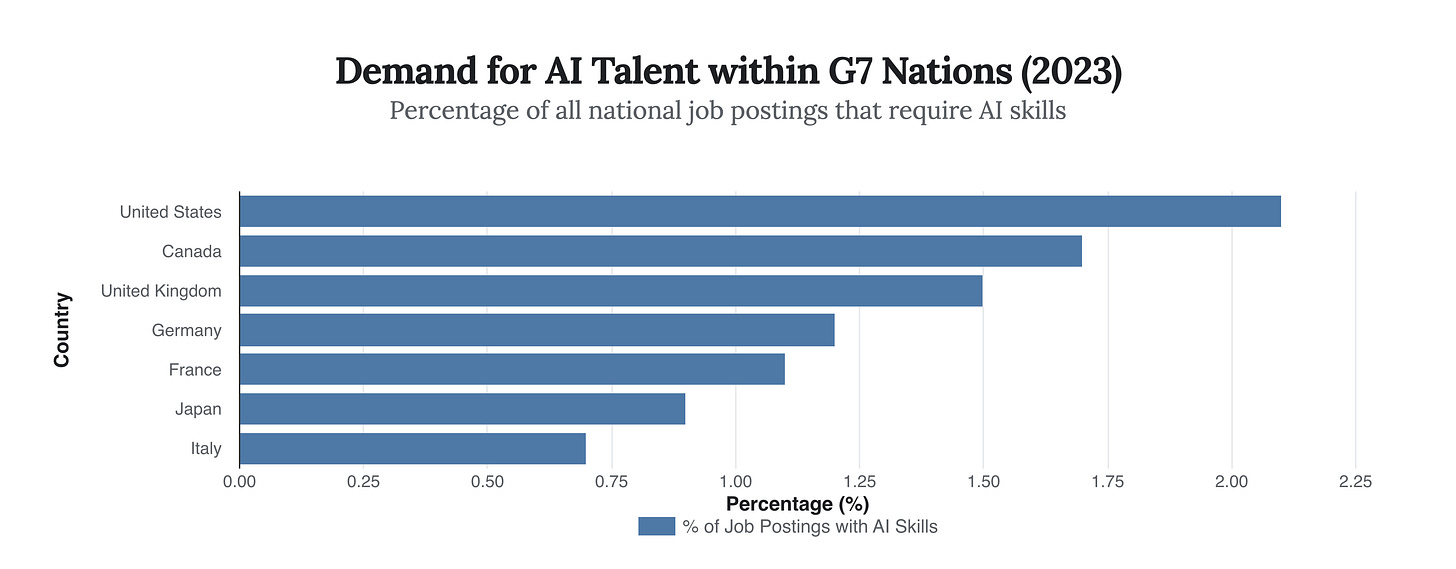

A shared regulatory and ethical framework can create a more fluid market for top-tier AI talent within the G7 bloc. As data privacy, security, and risk management protocols converge, it becomes easier for engineers, researchers, and product managers to move between companies and countries. This creates a powerful network effect, concentrating the world’s best AI minds within a techno-democratic ecosystem. A key leading indicator of this trend is the concentration of job postings requiring AI-specific skills within the G7 nations.

Based on data cited in a 2024 G7 report, this chart shows the demand for AI talent across the bloc. The United States leads, but the significant demand across all member nations highlights the importance of creating an interoperable policy environment to facilitate the movement and development of this critical workforce.

Front 3: The War Against Fragmentation

The greatest risk to the G7’s strategy is regulatory fragmentation. If each member creates a vastly different and conflicting set of domestic AI laws, the harmonizing effect of the Hiroshima Process will be lost. The divergence between the UK’s “pro-innovation,” principles-based approach and the comprehensive AI Act of the EU, which takes part in the G7 alongside its member states France, Germany, and Italy, already highlights this tension. The future success of the G7 framework depends on the political will of its members to align their domestic regulations with the shared principles, ensuring that compliance in one major jurisdiction translates easily to another. The OECD’s pilot program to monitor the uptake of the code of conduct will be a critical barometer for measuring this alignment.

In conclusion, the G7 AI principles agreement is a masterclass in modern economic statecraft. It leverages the bloc’s immense capital and market power to establish a globally influential framework without resorting to the slow and contentious process of international treaty-making. For businesses, the message is clear: these are not optional guidelines but the emerging rules of the road for the world’s most valuable markets. For policymakers, it is a foundational layer for a new form of values-based technological alliance. The agreement’s true power is its ability to shape the future by making the ‘voluntary’ adoption of democratic values a precondition for economic participation.

The G7 AI agreement proves that in the 21st century, the most powerful regulations are not written in law books, but are coded into the architecture of the global market itself.