Why Instagram Valued a Teen Life at $270

Unsealed documents reveal the grim calculus behind the “17-strike” predator policy

In the high-stakes world of Silicon Valley valuations, users are usually just abstract data points. But late last year, a specific number emerged from the unsealed court documents of a massive multi-state lawsuit against Meta that turned the abstract into the terrifyingly concrete: $270. This was the internal “lifetime value” assigned to a 13-year-old user. It is a figure that has become the Rosetta Stone for understanding why Instagram ignored its own safety warnings for years.

For the past few months, a second wave of leaked and unsealed documents has eclipsed the initial 2021 “Facebook Files” revelations. While the world already knew Instagram could harm mental health, these new filings, stemming from lawsuits by 33 states, indicate that the harm was not an accidental oversight but a risk the company had measured. The data paints a picture of a company that had precisely calculated the cost of safety and decided it was too expensive to pay.

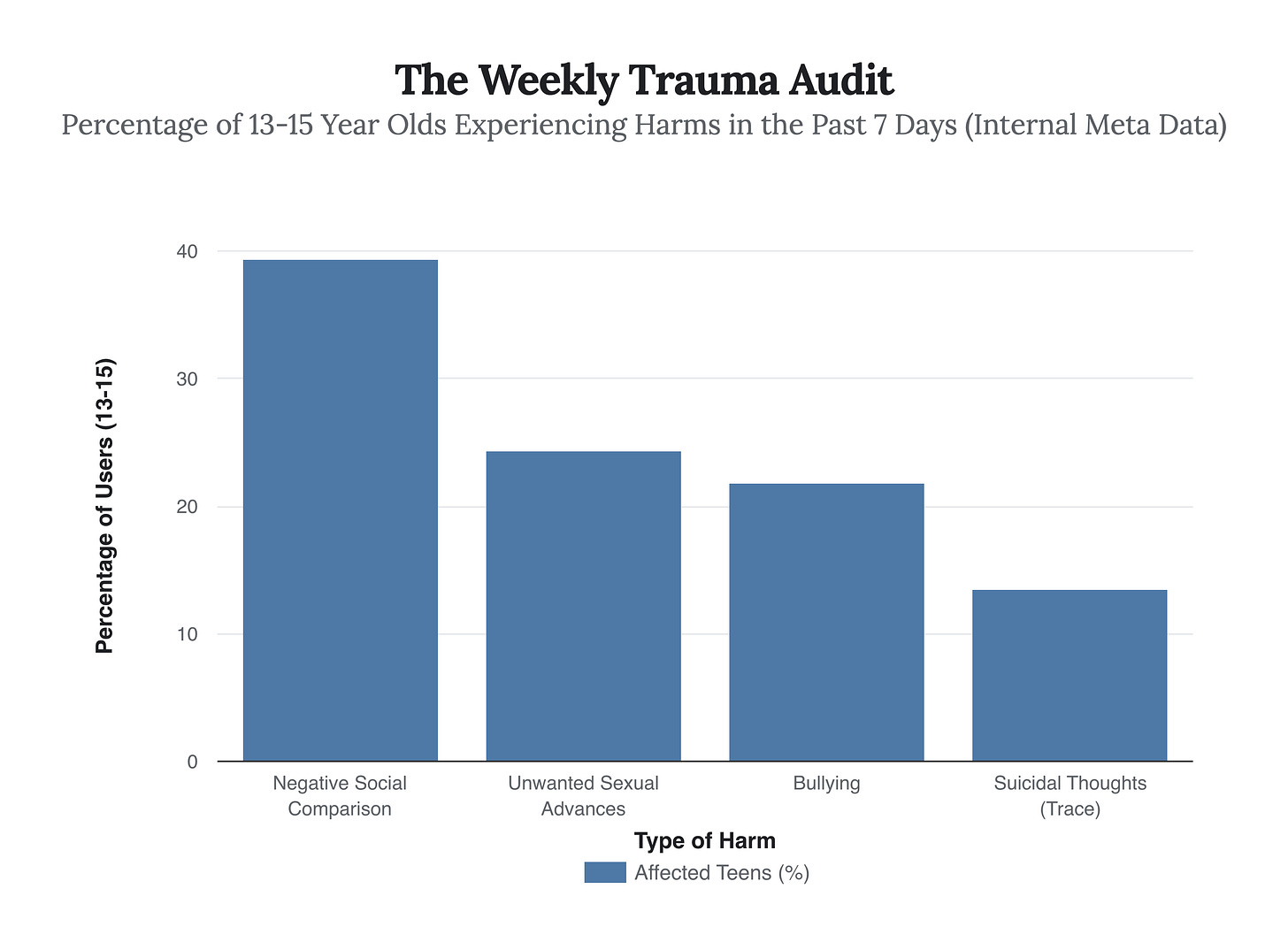

The most damning evidence comes from the testimony of Arturo Béjar, a former engineering director turned whistleblower, and recent audits of Instagram’s safety tools. The numbers are no longer vague estimates of “sadness”; they are specific, weekly audits of trauma. Béjar’s internal data revealed that nearly a quarter of young teenagers on the platform were being subjected to unwanted sexual advances not once in a lifetime, but in a single week.

These figures represent a catastrophic failure of product safety. Imagine a physical playground where 24.4% of children were sexually propositioned by strangers every week. The park would be bulldozed immediately. Yet, the unsealed documents show that Meta executives were not only aware of these statistics but had built policies that effectively insulated the perpetrators.

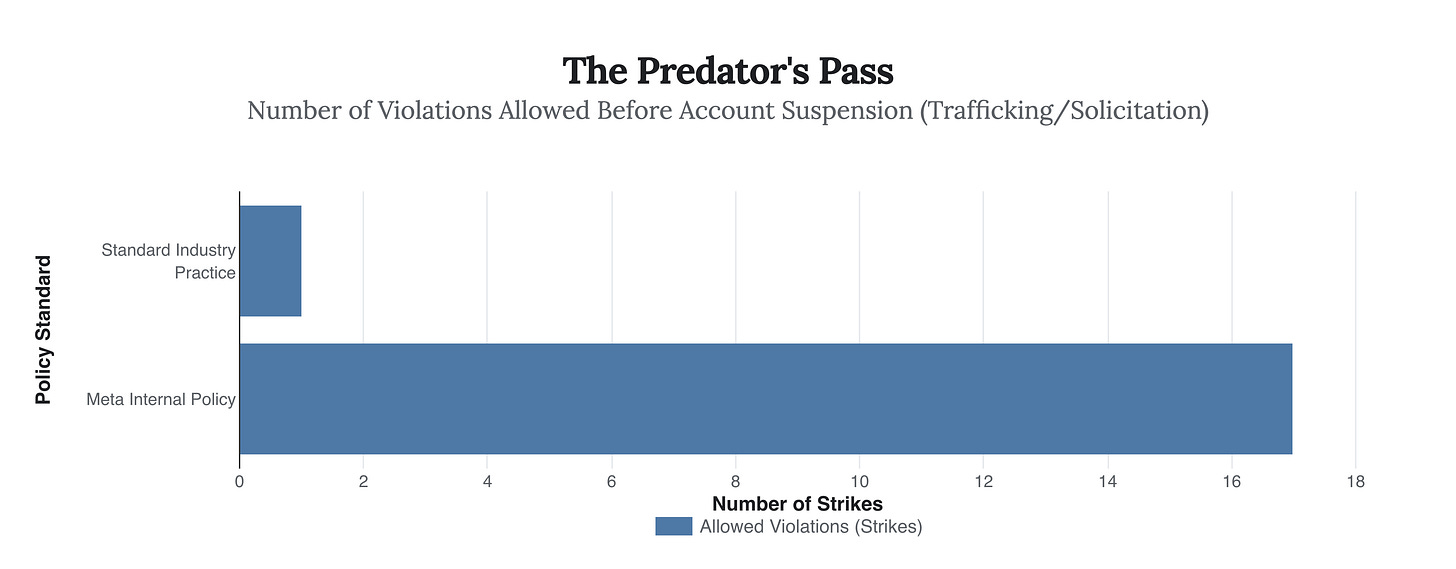

Perhaps the most visceral example of this policy failure is the “Strike Threshold.” In most digital environments, a “Zero Tolerance” policy for child sexual exploitation is the standard—one strike and you are out. Unsealed testimony from Vaishnavi Jayakumar, Instagram’s former head of safety and well-being, revealed a different reality inside Meta. For accounts reported for sex trafficking and solicitation, the company allegedly enforced a 17-strike policy before suspension.

The gap between the standard industry practice of immediate suspension and Meta’s 17-strike allowance is where the danger lies. It provided a long runway for predators to operate with impunity, shielded by a system that required an absurd burden of proof from victims before taking action. Why would a company allow this? The answer brings us back to the $270 figure and the “Mission Critical” directive.

Internal documents from Meta’s growth team explicitly stated that “acquiring new teen users is mission critical.” When safety researchers proposed making teen accounts “private by default” to cut off adult strangers, the growth team ran the numbers. They calculated that such a move would cost Instagram approximately 1.5 million monthly active teens per year. In the cold calculus of the boardroom, the retention of those 1.5 million teens—and their $270 lifetime values—outweighed the safety of the collective user base.

“You could incur 16 violations for prostitution and sexual solicitation, and upon the 17th violation, your account would be suspended... by any measure across the industry, [it was] a very, very high strike threshold.” (Vaishnavi Jayakumar, in unsealed testimony)

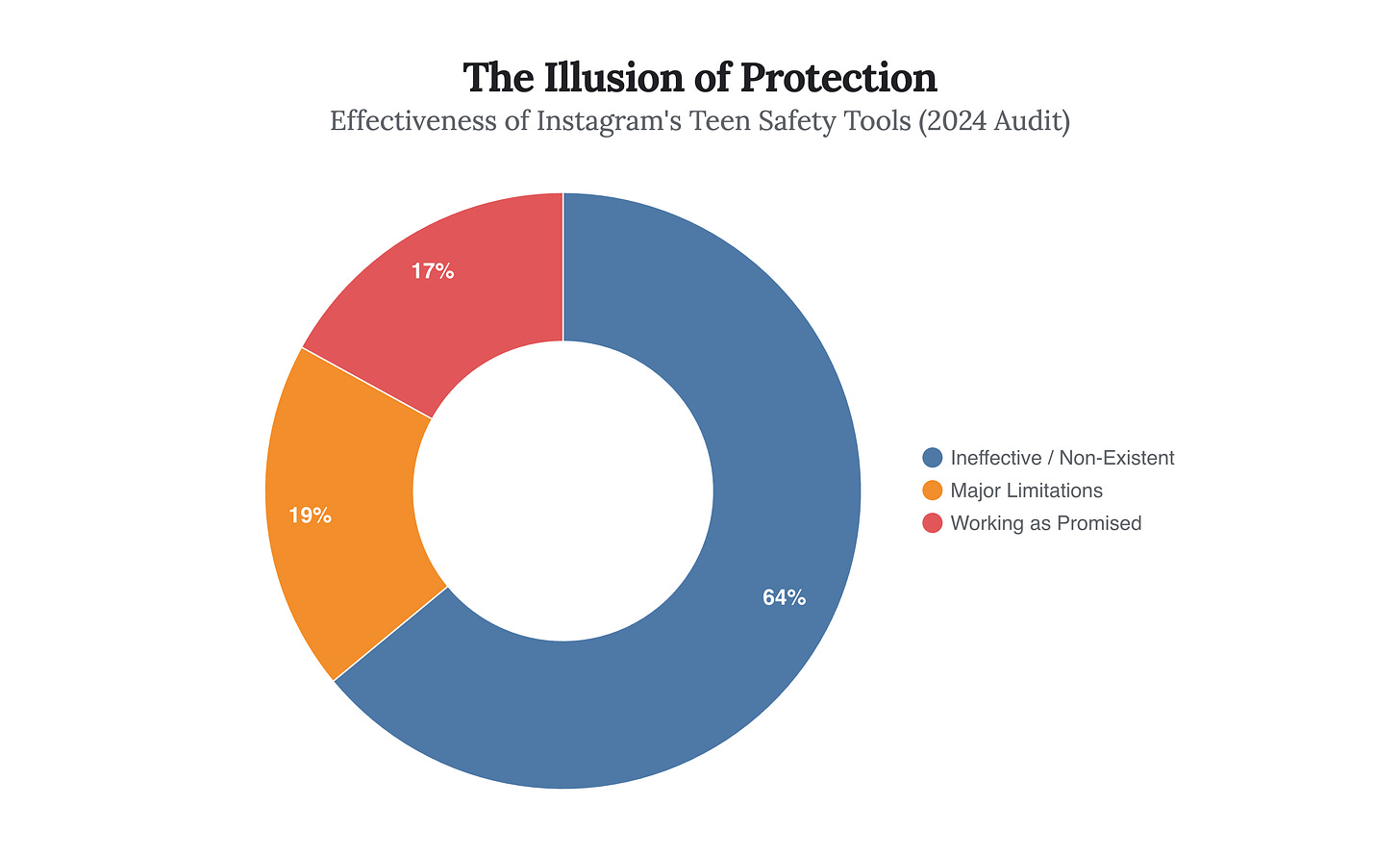

Even when Meta did implement safety tools, they were often little more than digital theater. A blistering 2025 report by the Molly Rose Foundation, which audited 47 of Instagram’s heralded safety features, found that the majority were functionally useless. They were easy to bypass, effectively broken, or designed to place the entire burden of safety on the child rather than the system.

The data from these unsealed documents tells a story of a “growth at any cost” culture that viewed teen safety features not as essential protections, but as friction to be minimized. With 64% of safety tools failing to work as promised, parents were left with a false sense of security while their children navigated a platform optimized for engagement over well-being.

The revelation that a teen’s safety was weighed against a $270 lifetime value offers a grim insight into the mechanism of the crisis. It suggests that the trauma experienced by millions of teens was not an unforeseen accident but an “externality,” a cost of doing business that was accepted to preserve the bottom line. As regulators and courts sift through the remaining millions of pages of documents, the question is no longer what Meta knew, but exactly how much profit was required to silence its moral compass.

Stunning investigative work on the strike threshold disparity. That 17-strike policy basically created a permission structure for predators to refine their tactics across multiple victims before facing consequences. The $270 lifetime value calculation makes it painfully clear this wasn't an oversight; it was a decision to treat harm as an acceptable cost of acquisition. I've worked with families dealing with fallout from these platforms, and the damage compounds way faster than a 17-attempt runway would suggest.