The Transparency Gauntlet: The EU’s High-Stakes Regulatory Showdown with Meta and TikTok

An Intelligence Briefing on the Digital Services Act’s First Major Enforcement Test and its Geopolitical Shockwaves

The European Union’s ambitious bid to regulate Big Tech has entered its most critical phase. Recent preliminary findings by the European Commission assert that both Meta (encompassing Facebook and Instagram) and TikTok are in breach of their transparency obligations under the landmark Digital Services Act (DSA). This action is not merely a procedural step; it is the first major stress test of the DSA’s enforcement power, setting a precedent for digital governance with global implications.

The core of the issue revolves around restricted access to platform data for vetted researchers and cumbersome, potentially deceptive, mechanisms for users to report illegal content. For industry leaders, investors, and policymakers, understanding the nuances of this conflict is paramount. It signals a new, legally mandated era of algorithmic accountability, where the ‘black box’ of platform operations is being forcibly pried open. The outcome will redefine the operational playbooks for tech giants in one of the world’s largest markets and influence regulatory frameworks worldwide.

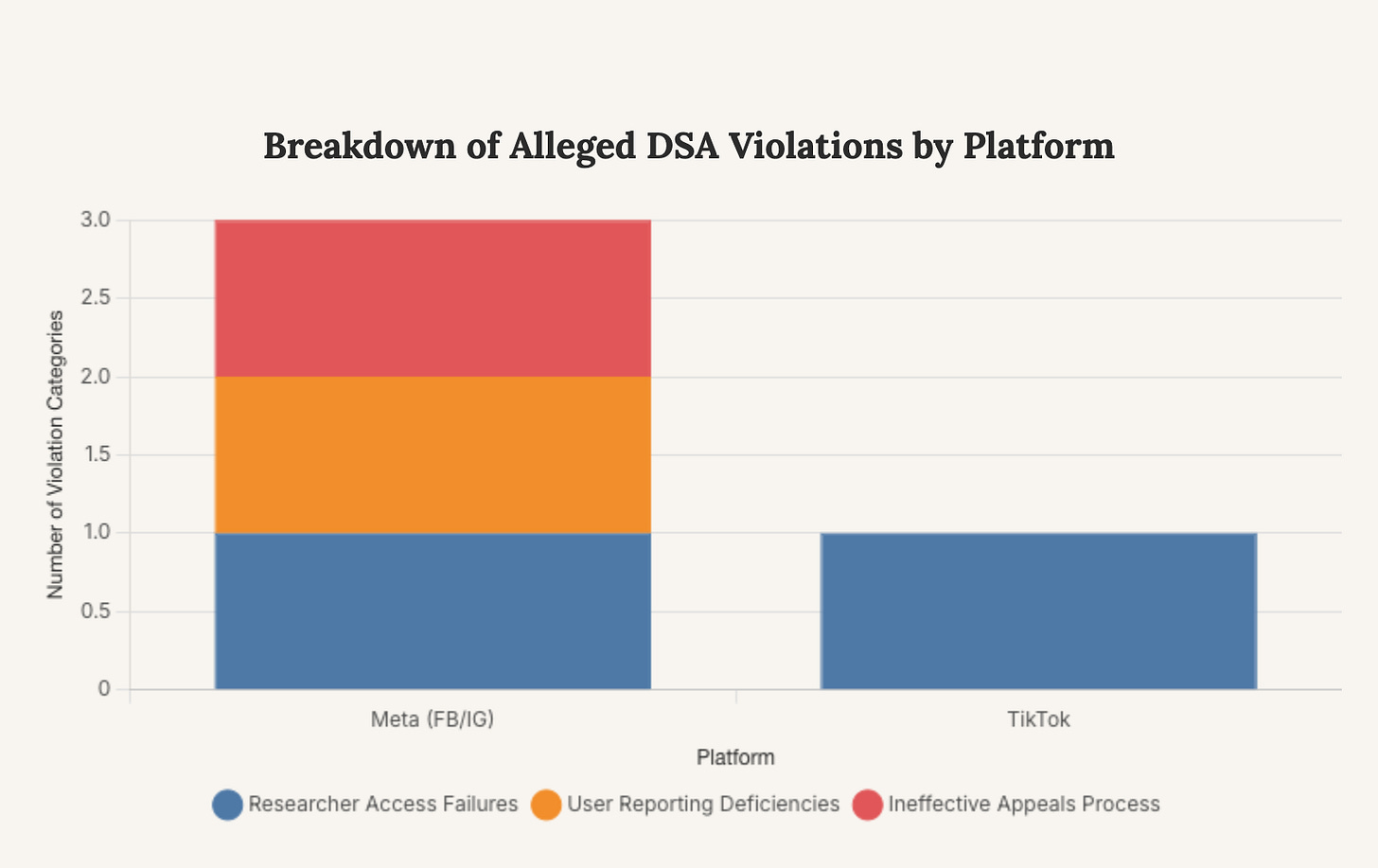

The Anatomy of Non-Compliance: Where Brussels Alleges the Platforms Fall Short

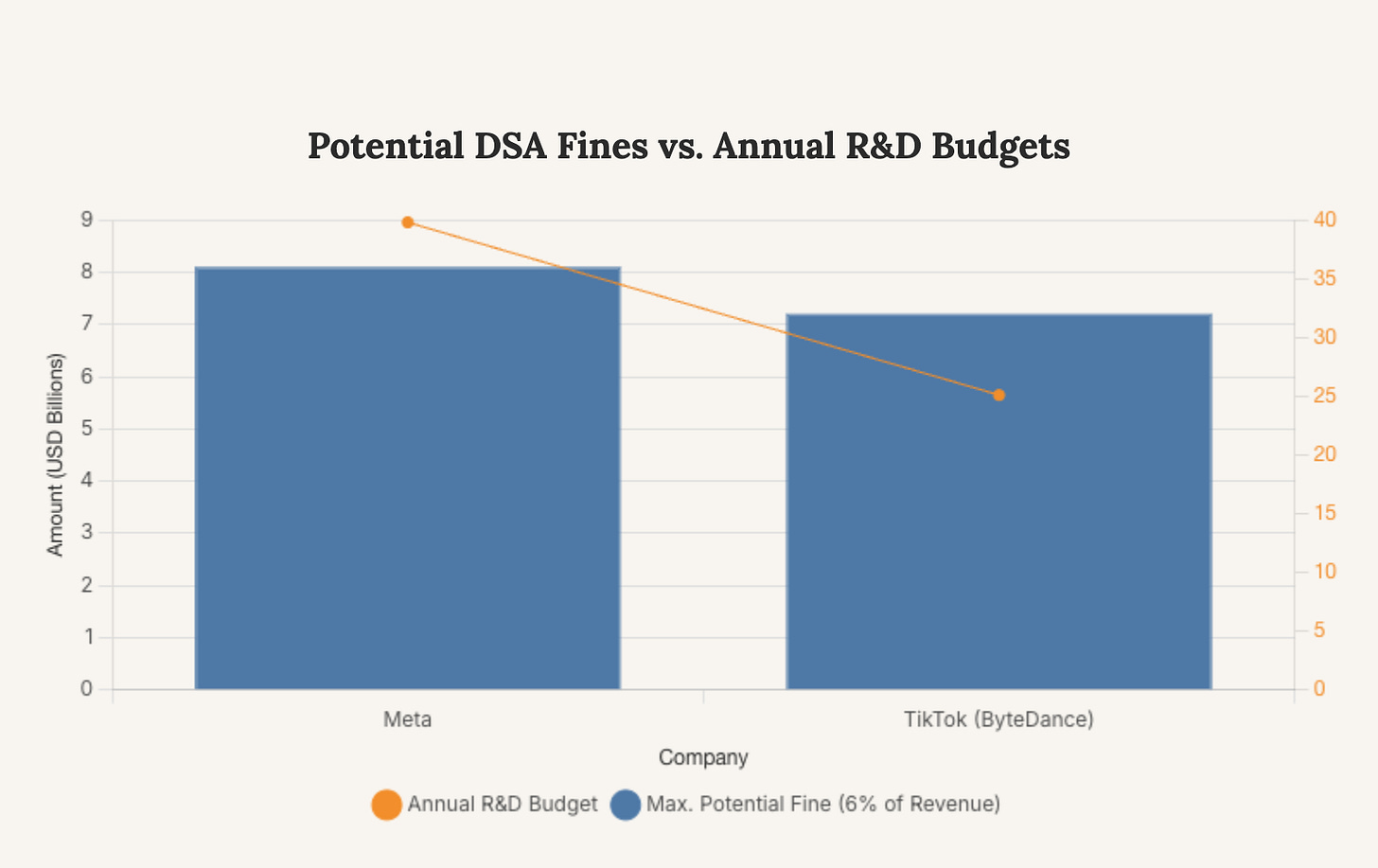

The European Commission’s case against Meta and TikTok is not a single complaint but a multi-faceted accusation of systemic failures in transparency and user protection. These alleged breaches strike at the heart of the DSA’s objective: to make platforms accountable for the societal risks their services may amplify. The potential penalties are severe. Fines can reach 6% of a company’s global annual turnover, which for firms of this size would run into billions of dollars.
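To make the 6% ceiling concrete, the short Python sketch below computes the upper bound of a fine from a turnover figure. The function name `max_dsa_fine` and the 100 billion turnover are hypothetical illustrations, not reported company figures, and the sketch covers only the headline cap rather than how the Commission would actually set a fine.

```python
from decimal import Decimal

# The 6% ceiling on fines cited in this briefing.
DSA_MAX_FINE_RATE = Decimal("0.06")


def max_dsa_fine(global_annual_turnover: Decimal) -> Decimal:
    """Return the upper bound of a fine for a given global annual turnover.

    Hypothetical helper for illustration only: it applies the headline
    6% cap and ignores any other factor that would shape a real fine.
    """
    if global_annual_turnover < 0:
        raise ValueError("turnover must be non-negative")
    return global_annual_turnover * DSA_MAX_FINE_RATE


# Assumed turnover of 100 billion (in any single currency), not a reported figure.
example_turnover = Decimal("100000000000")
print(f"Maximum fine: {max_dsa_fine(example_turnover):,.0f}")
```

Under this assumed turnover the ceiling comes to 6 billion, which shows how the cap scales linearly with company size.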

The ‘Black Box’ Problem: Obstructed Access for Researchers

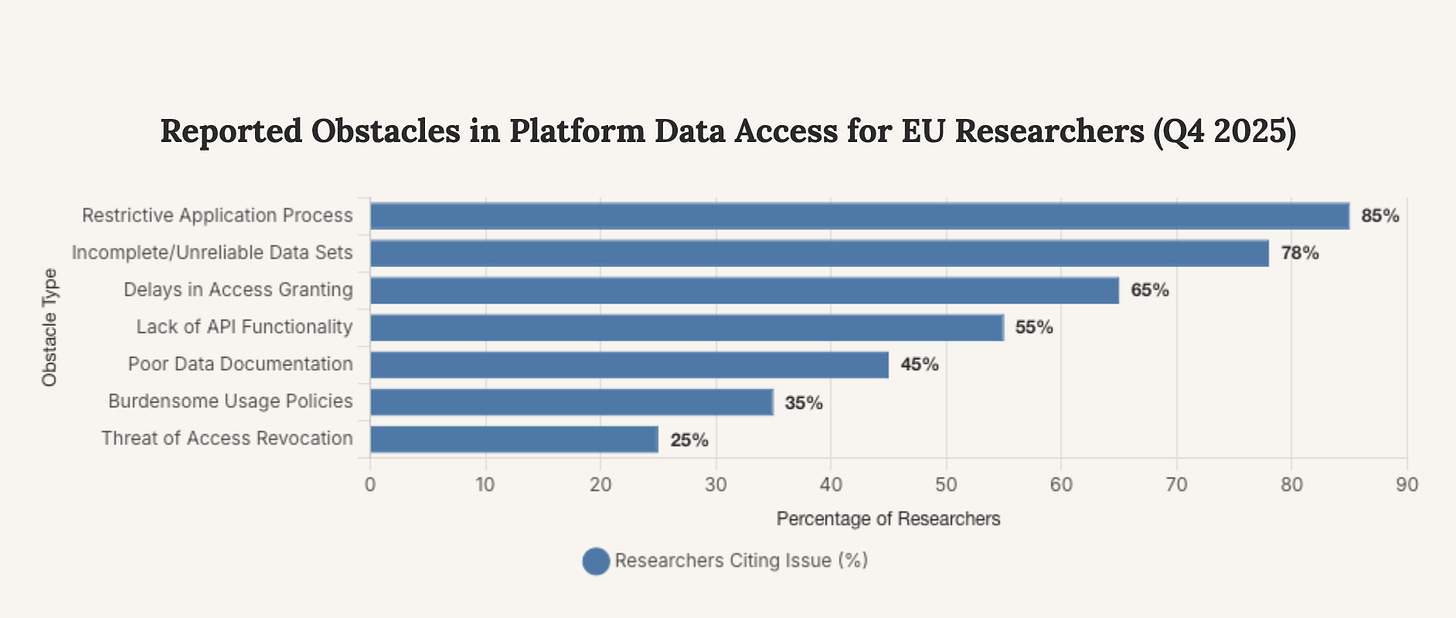

A central pillar of the DSA is the mandate for Very Large Online Platforms (VLOPs) to grant accredited researchers access to public data. This is designed to enable independent scrutiny of systemic risks like disinformation, harms to minors, and the impact of algorithms on mental health. However, the Commission’s preliminary findings conclude that both Meta and TikTok have erected significant barriers, creating “burdensome procedures and tools” that result in researchers receiving only partial or unreliable data. This alleged obstructionism is a direct challenge to the DSA’s core transparency principle.

This chart illustrates the primary hurdles faced by independent researchers in the EU when attempting to access platform data from Meta and TikTok, according to aggregated reports and complaints. The data shows that the application process itself and the quality of the data provided are the most significant barriers to effective, independent oversight as mandated by the DSA.

User Reporting and Redress: A System of ‘Dark Patterns’?

The Commission’s investigation into Meta’s platforms, Facebook and Instagram, unearthed further concerns regarding user-facing tools. The DSA requires simple and accessible mechanisms for users to report illegal content, such as hate speech or material depicting child abuse. The preliminary findings suggest Meta’s reporting systems are overly complex and may even employ “dark patterns,” deceptive interface designs that discourage users from completing reports. Furthermore, the appeals process for content moderation decisions was found to be ineffective, limiting users’ ability to provide evidence or contest removals.

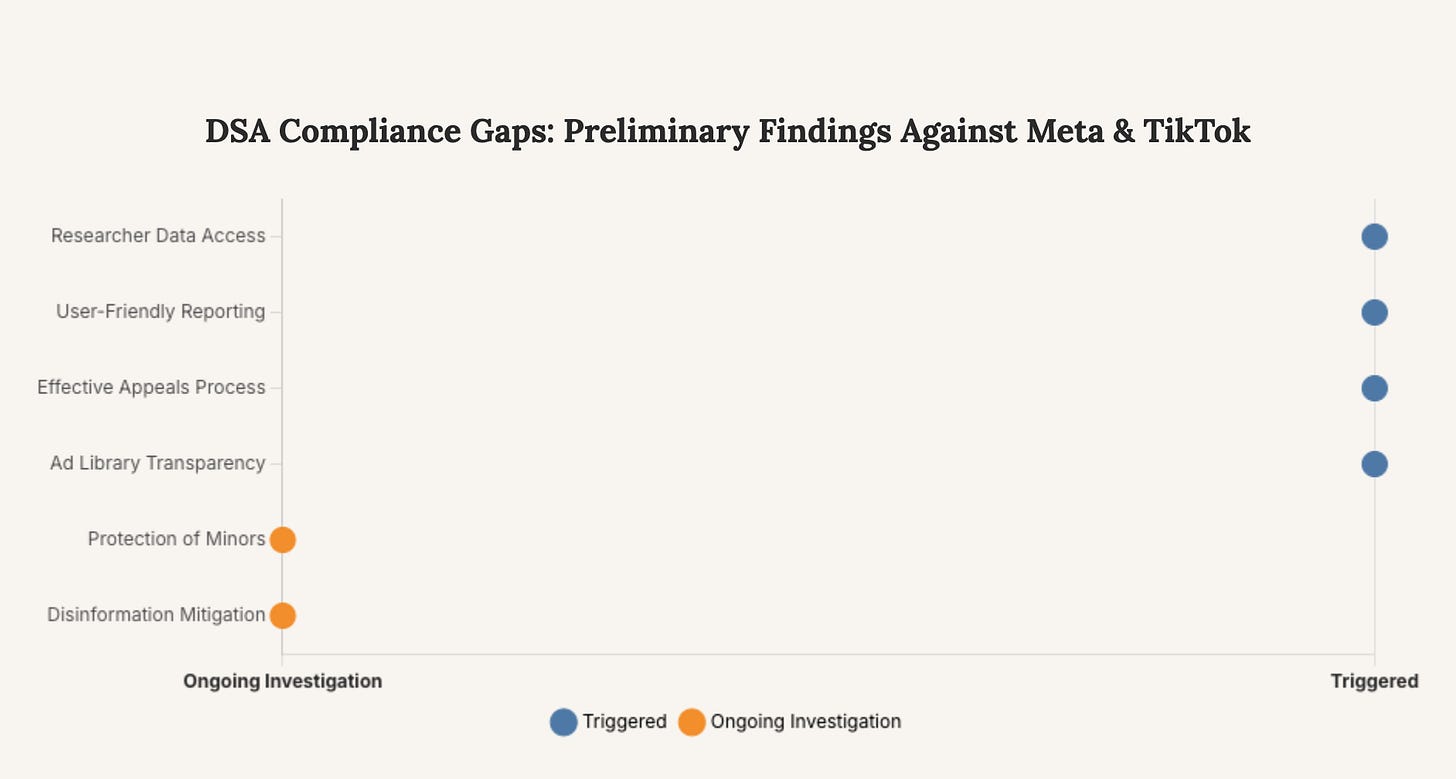

This dot plot visualizes the status of the European Commission’s proceedings. The areas where preliminary findings have officially identified breaches (Triggered) are distinguished from those still under active investigation, highlighting the specific focus of the current enforcement actions.

The Strategic Response: Diverging Defenses and a Looming Standoff

Faced with the Commission’s allegations, Meta and TikTok have adopted distinct defensive postures, revealing differing strategic calculations and setting the stage for a complex legal and political battle. Their responses offer a glimpse into the fundamental tensions between regulatory demands for transparency and the operational realities of global tech platforms.

“Our democracies depend on trust. That means platforms must empower users, respect their rights, and open their systems to scrutiny. The DSA makes this a duty, not a choice.” - Henna Virkkunen, Executive Vice-President for Tech Sovereignty, Security and Democracy

Meta’s Defense: Compliance Through Iteration

Meta has publicly disagreed with the Commission’s preliminary findings, asserting that its systems are compliant with the DSA. The company’s defense rests on the argument that it has made significant changes to its reporting, appeals, and data access tools since the DSA came into force. Meta has dedicated a team of over 1,000 people to work on DSA compliance, highlighting its investment in adapting its long-standing safety and integrity processes. This strategy positions the alleged shortcomings as part of an iterative process of alignment rather than a deliberate effort to evade regulation. However, regulators appear unconvinced that these changes go far enough to meet the law’s stringent requirements.

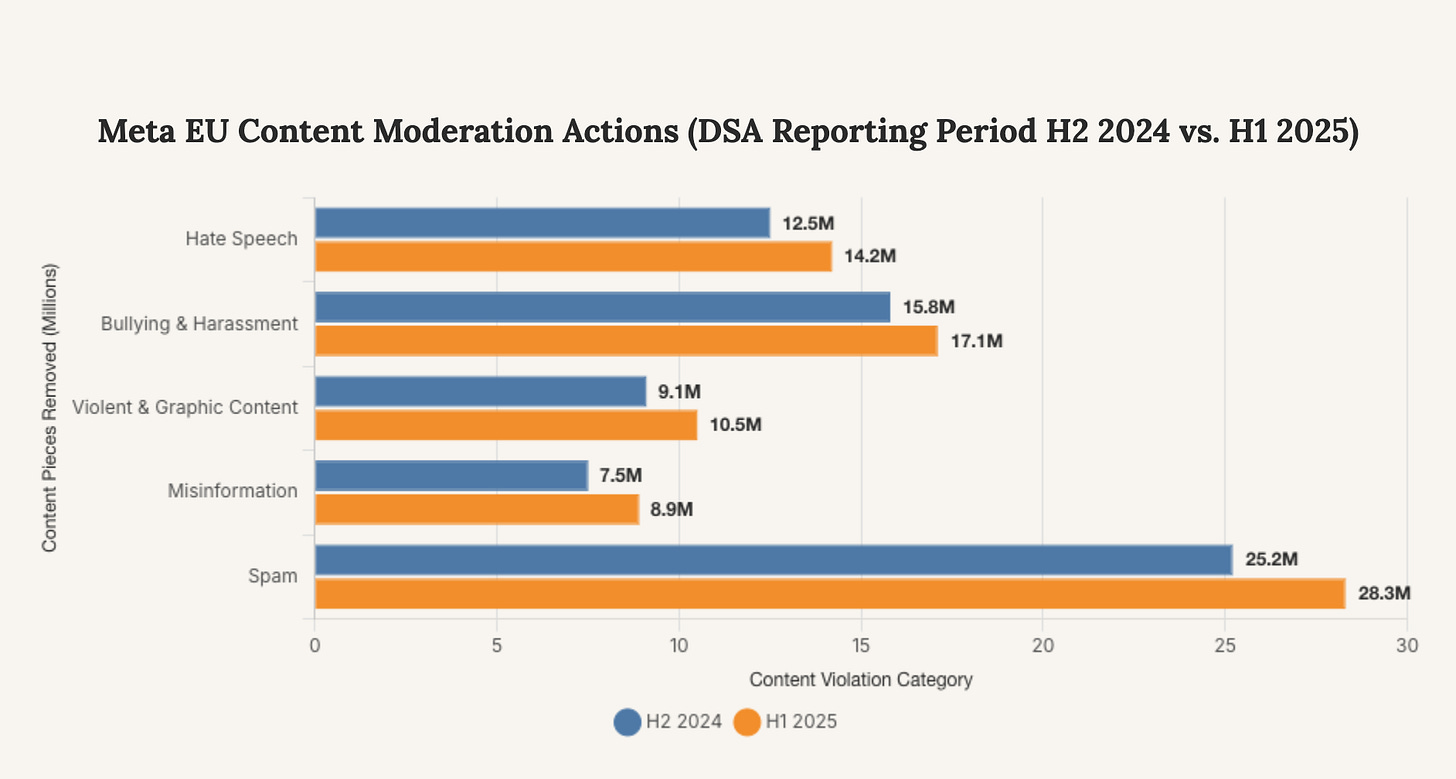

This chart compares the volume of content removed by Meta in the EU across key policy areas in two consecutive DSA reporting periods. While Meta’s own data shows an increase in enforcement, the Commission’s investigation focuses on the transparency and accessibility of the processes behind these numbers, not just the raw output.

TikTok’s Dilemma: The GDPR-DSA Conflict

TikTok’s response introduces a different, more complex legal argument. The company has stated it is “committed to transparency” but argues that the DSA’s data access requirements are in “direct tension” with the EU’s other flagship technology regulation, the General Data Protection Regulation (GDPR). TikTok has urged regulators to provide clarity on how to reconcile the demand for data sharing with strict user privacy mandates. This defense attempts to reframe the debate from one of non-compliance to one of regulatory conflict, placing the onus back on Brussels to resolve perceived contradictions in its own legal frameworks. This position also reflects broader industry concerns about navigating an increasingly dense web of digital regulations.

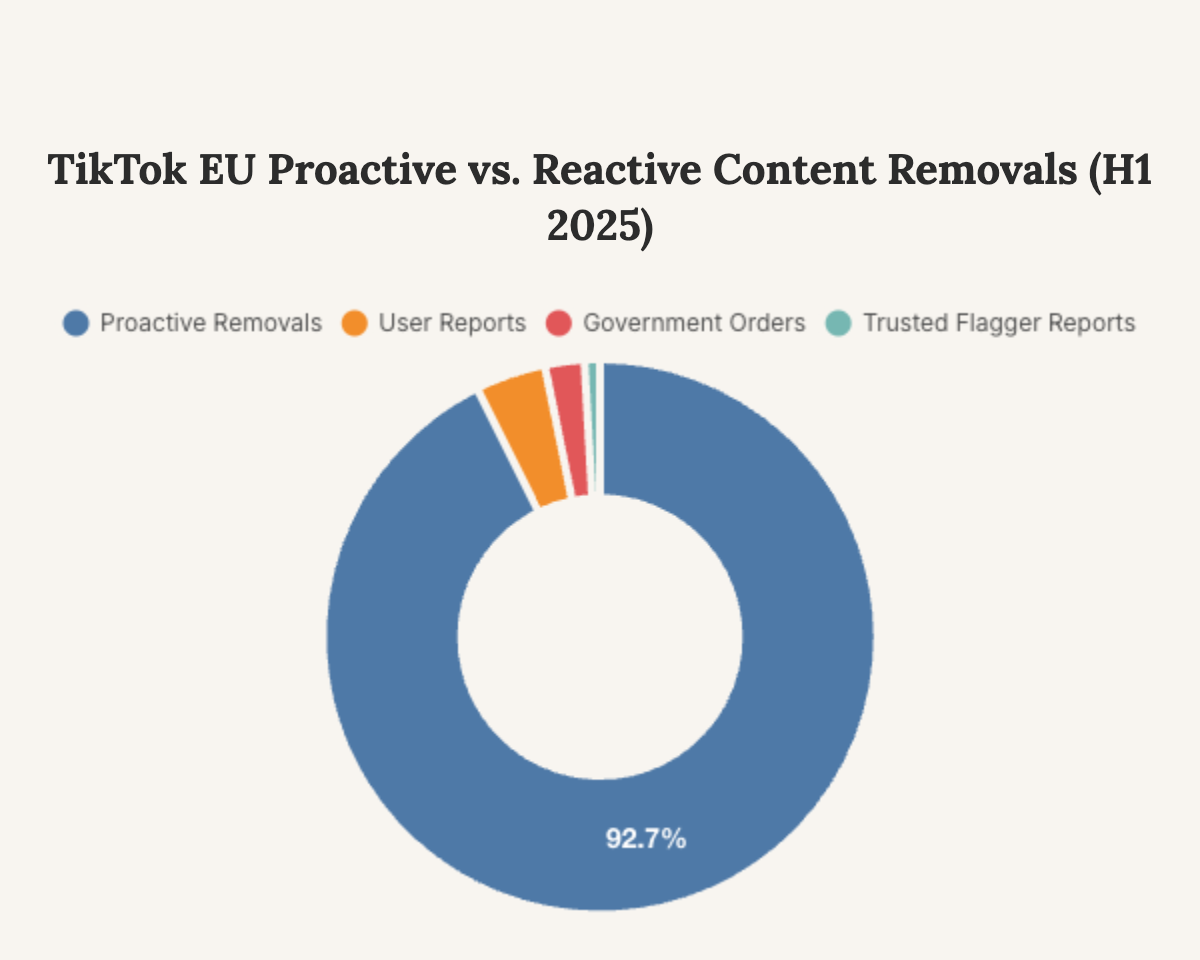

Based on TikTok’s latest DSA transparency report, this chart shows that the overwhelming majority of content removals are initiated by its own proactive systems. While this points to extensive automated moderation, EU regulators are concerned about the lack of transparency in how these systems work and the difficulty for external parties to verify their accuracy and fairness.

The Ripple Effect: Strategic Implications and Future Scenarios

The European Commission’s preliminary findings against Meta and TikTok are a watershed moment. The resolution of this dispute will not only define the operational landscape for these two giants in Europe but will also send powerful signals to the entire global technology sector and regulatory bodies worldwide. The era of self-regulation is definitively over; the age of co-regulation, backed by punitive enforcement, has begun.

Scenario 1: Full Compliance and the New Operational Cost

Should the platforms concede and fully implement the changes demanded by the Commission, the result would be a new baseline for transparency. This would include building truly open and accessible data APIs for researchers and redesigning user interfaces to prioritize safety and clarity over engagement metrics. For investors, this represents a significant increase in the cost of compliance, requiring sustained investment in engineering, legal, and policy teams. Operationally, it could mean a fundamental shift in product design philosophy.

This chart contextualizes the maximum potential fines under the DSA by comparing them to the companies’ reported annual research and development expenditures. The sheer scale of the potential penalties demonstrates the EU’s leverage and underscores the financial incentive for platforms to invest heavily in compliance rather than risk enforcement.

Scenario 2: Protracted Legal Battles and Regulatory Uncertainty

If Meta and TikTok choose to challenge the findings, it could lead to years of litigation in European courts. This would create a prolonged period of regulatory uncertainty, potentially emboldening other platforms to adopt a wait-and-see approach to their own DSA compliance. This scenario would test the EU’s resolve and the efficacy of its enforcement mechanisms. For industry, it would mean navigating a fragmented and contested regulatory environment, increasing legal risk and potentially delaying the deployment of new products and features in the EU market.

“Allowing researchers access to platforms’ data is an essential transparency obligation under the DSA, as it provides public scrutiny into the potential impact of platforms on our physical and mental health.” - European Commission Statement

Scenario 3: A Negotiated Settlement and the Path of Co-Regulation

The most probable outcome is a negotiated settlement where the platforms agree to a set of binding commitments to remedy the identified breaches. This would allow the Commission to claim a significant enforcement victory while enabling the companies to avoid the maximum fines and the precedent of a formal non-compliance decision. This path would solidify the DSA’s model of co-regulation, where regulators set the framework and platforms are compelled to develop compliant solutions under the threat of severe penalties. This outcome would still necessitate significant operational changes and investment from the platforms.

This chart provides a clear visual breakdown of the specific preliminary findings against each platform. While both are accused of obstructing researcher access, the allegations against Meta are broader, currently encompassing failures in its user reporting and appeals mechanisms as well.

Forward Outlook: The Inescapable Trajectory of Transparency

Regardless of the precise outcome of these specific proceedings, the strategic trajectory is clear. The EU has established a new global standard for platform accountability, and the political will to enforce it is evident. The actions against Meta and TikTok are not an isolated event but the opening salvo in a sustained campaign to bring systemic transparency to the digital sphere. Other VLOPs are undoubtedly watching closely, aware that they could be next in the Commission’s sights.

For stakeholders, the key takeaway is that transparency is no longer a corporate social responsibility initiative but a core, legally mandated operational requirement in major markets. The financial and reputational risks of non-compliance are now too significant to ignore. The platforms that thrive in this new environment will be those that integrate regulatory compliance and radical transparency into their core product and engineering philosophies, rather than treating them as an external constraint.

The true cost of operating a global digital platform now includes the non-negotiable price of systemic transparency.