The 945 Terawatt-Hour Question: AI’s Soaring Energy Demand Is About to Reshape Global Power

An in-depth analysis of the escalating climate cost of artificial intelligence and the high-stakes race for sustainable computing.

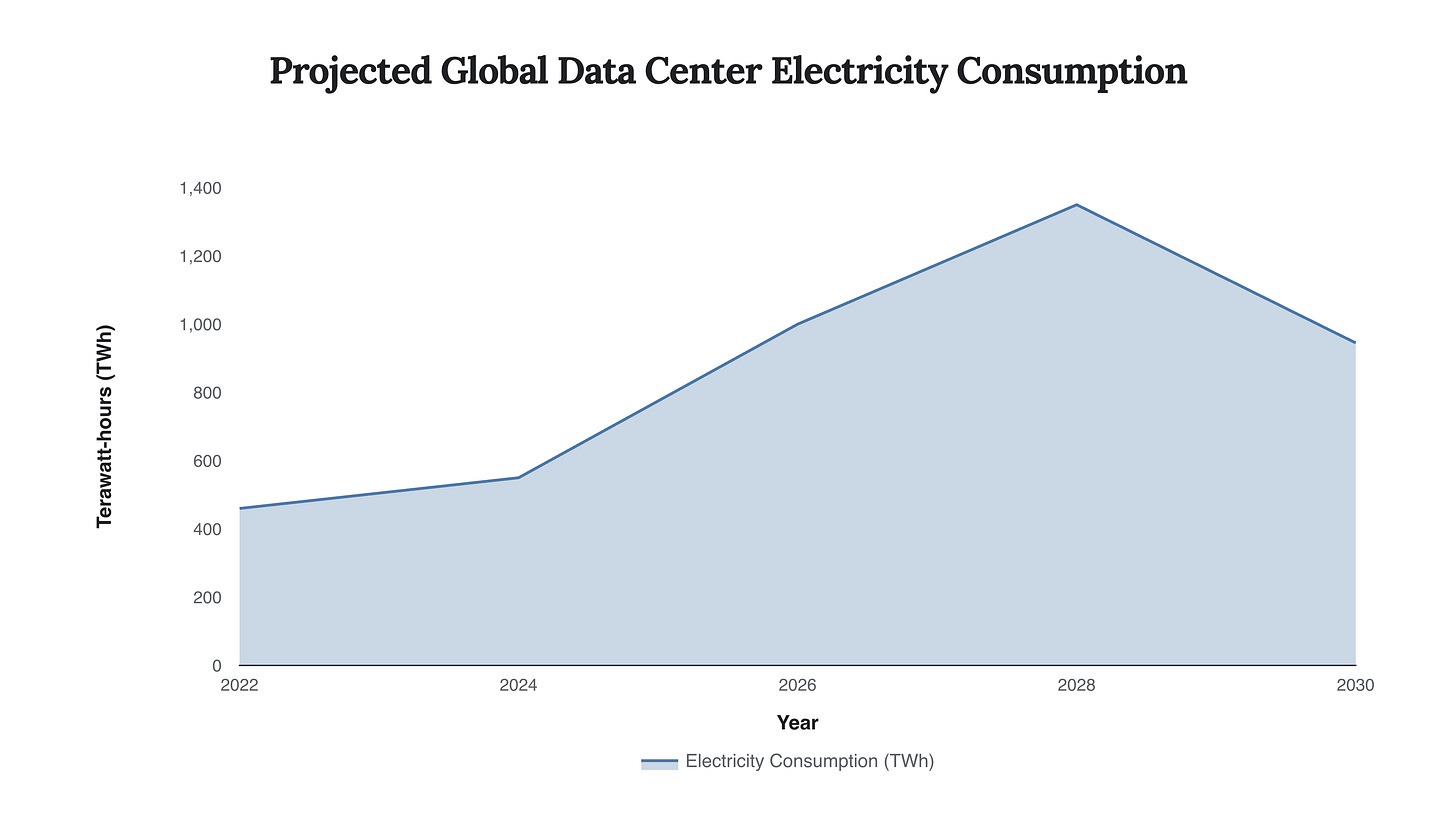

The artificial intelligence revolution, for all its promised efficiencies and advancements, is built on a foundation of rapidly escalating energy consumption. The computational power required to train and operate today’s sophisticated AI models carries a significant and growing climate cost. According to a recent report from the International Energy Agency (IEA), global electricity demand from data centers is projected to more than double by 2030, reaching approximately 945 terawatt-hours, roughly as much electricity as Japan consumes in total today.

This surge is not a distant forecast; it’s a present and accelerating reality that poses a direct challenge to global climate goals and strains existing power grids. This briefing deconstructs the multifaceted environmental impact of AI’s computational appetite, from the carbon footprint of model training to the vast water resources consumed for cooling, and explores the emerging strategies to mitigate this high-stakes challenge.

The Unchecked Growth of AI’s Energy Appetite

The core of AI’s environmental challenge lies in the exponential growth of its energy demands. This is not a linear increase but a compounding one, driven by the race for ever-larger and more powerful models. The hardware that powers this revolution, particularly high-performance GPUs, consumes vast amounts of electricity, and the facilities that house them—sprawling data centers—require constant cooling, further adding to their energy load.
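To make the compounding concrete, the short Python sketch below works out the constant yearly growth rate implied by the IEA projection cited in this briefing (demand more than doubling over roughly five years, to about 945 TWh). The five-year horizon and the exact 2x multiple are simplifying assumptions; since the projection says "more than double", the result is a lower bound rather than a forecast.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Constant yearly growth rate that multiplies a quantity by `multiple` in `years`."""
    return multiple ** (1.0 / years) - 1.0


# Assumption: exactly 2x over five years. The projection says "more than double",
# so the true implied rate would be somewhat higher.
rate = implied_annual_growth(2.0, 5)
print(f"Implied minimum annual growth: {rate:.1%}")

# If demand reaches about 945 TWh after at least doubling,
# the starting level must be below half of that figure.
baseline_upper_bound_twh = 945 / 2
print(f"Starting level is below about {baseline_upper_bound_twh:.1f} TWh")
```

Under these assumptions, doubling in five years corresponds to growth of nearly 15% per year, every year.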

Data Centers: The Epicenter of Consumption

Data centers are the physical heart of the AI boom, and their energy consumption is staggering. In 2023, the data centers of major tech companies like Google and Microsoft already consumed more electricity than entire countries such as Jordan and Ghana. This demand is set to intensify dramatically. In the United States alone, data centers are projected to account for almost half of the growth in electricity demand between now and 2030. This rapid expansion is putting immense pressure on electrical grids. Northern Virginia, a major data center hub, is a case in point: the rapid clustering of facilities there is already straining local infrastructure.

Caption: This chart illustrates the projected surge in global electricity consumption by data centers, with a significant acceleration attributed to the demands of AI.

Training vs. Inference: The Two Fronts of AI’s Carbon Footprint

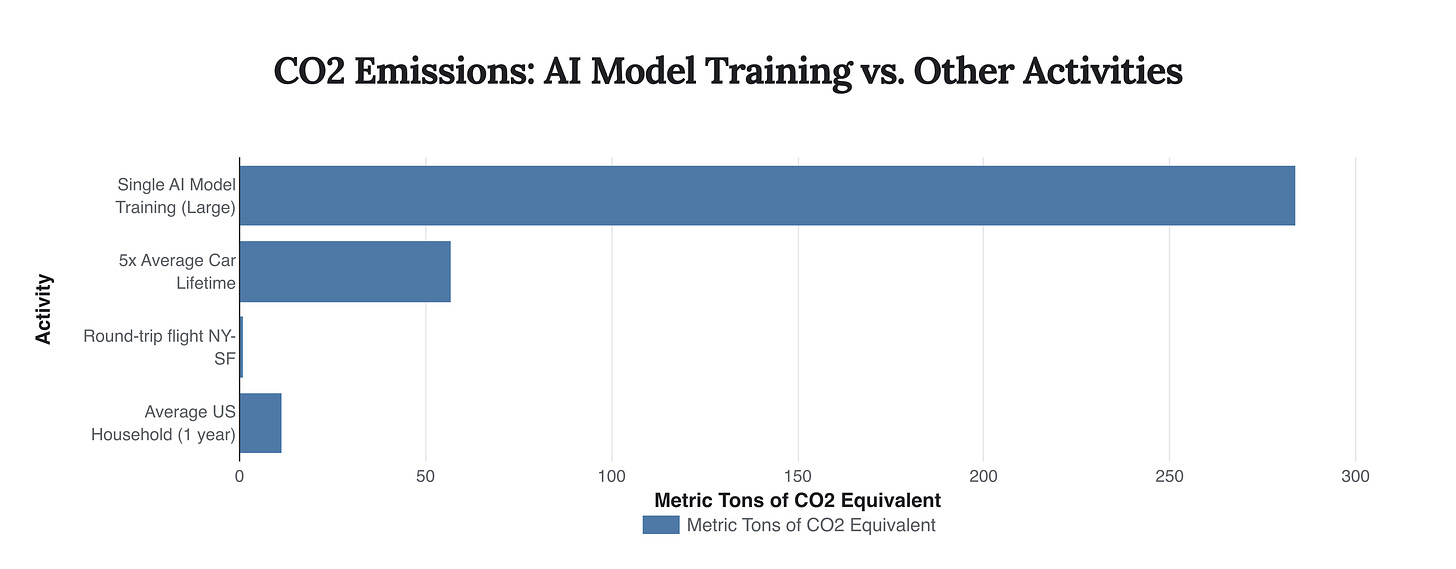

The environmental impact of AI can be broken down into two primary phases: training and inference. Training a large language model is an extremely energy-intensive process that is largely paid for up front. Research has shown that training a single large AI model can emit roughly as much carbon as five cars do over their entire lifetimes. For example, the training of GPT-3 is estimated to have consumed 1,287 MWh of electricity, resulting in emissions of 552 metric tons of CO2 equivalent. While the energy cost per query (inference) is far lower, the sheer volume of interactions with popular AI models means that the cumulative energy consumption of the inference phase can also be substantial.
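The two GPT-3 estimates quoted above can be combined to back out the carbon intensity of the electricity they imply. The Python sketch below does only that arithmetic; the constant names are illustrative, and the result describes the cited estimates, not a measured property of any particular grid.

```python
# Figures as cited in this article for GPT-3 training.
TRAINING_ENERGY_MWH = 1287        # estimated electricity consumed
TRAINING_EMISSIONS_TONNES = 552   # estimated emissions, metric tons CO2e

# Convert to kilograms and kilowatt-hours, then divide.
emissions_kg = TRAINING_EMISSIONS_TONNES * 1000
energy_kwh = TRAINING_ENERGY_MWH * 1000
intensity_kg_per_kwh = emissions_kg / energy_kwh
intensity_g_per_kwh = intensity_kg_per_kwh * 1000

print(f"Implied carbon intensity: {intensity_g_per_kwh:.1f} gCO2e per kWh")
```

The implied figure is about 429 gCO2e per kWh, which shows how strongly the emissions of a training run depend on the electricity mix behind it: the same 1,287 MWh drawn from a cleaner supply would produce proportionally fewer emissions.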

Caption: This chart provides a stark comparison of the carbon dioxide emissions from training a single large AI model against other common activities, highlighting the significant environmental cost of model development.

The Hidden Costs: Water Consumption and E-Waste

Beyond electricity consumption and carbon emissions, the AI industry’s environmental footprint has other, less visible dimensions. The vast quantities of water required for cooling data centers and the growing problem of electronic waste from rapidly obsolete hardware are significant secondary concerns.

AI’s Thirst for Water

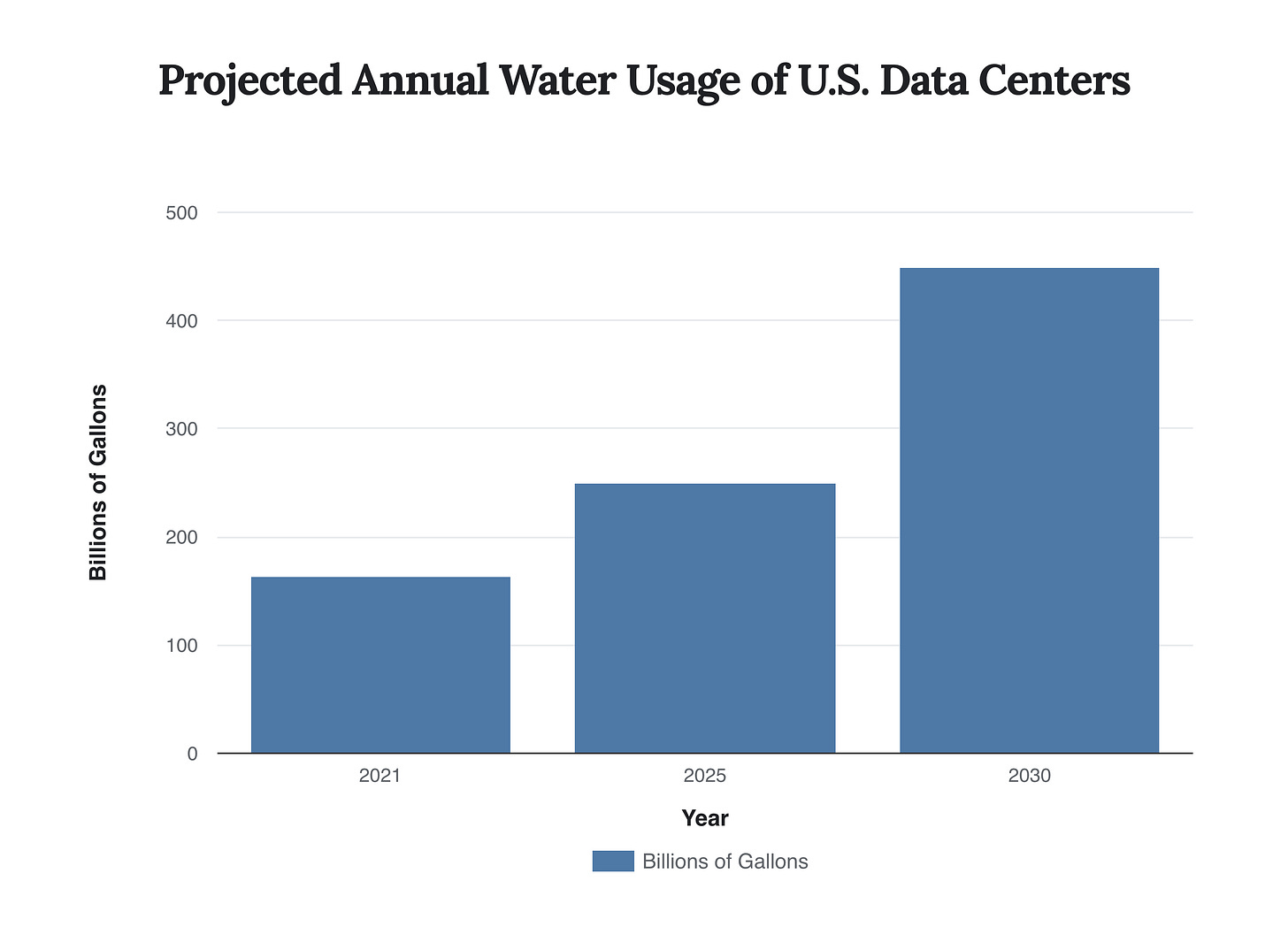

Data centers are not just energy-hungry; they are also incredibly thirsty. Large data centers can consume up to 5 million gallons of water per day, equivalent to the daily water usage of a small city. This water is primarily used for cooling the servers that run AI models. As the demand for AI grows, so does the strain on local water resources, a particularly acute problem in water-stressed regions. It’s estimated that a session of 20-50 queries with a model like ChatGPT can consume around 500 milliliters of water.
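As a rough sanity check on these figures, the Python sketch below converts them into per-query and metric terms. It assumes the "gallons" above are US gallons and that the 500 milliliters is spread evenly across the queries in a session; both are simplifications made for illustration.

```python
LITERS_PER_US_GALLON = 3.785411784  # exact definition of the US liquid gallon

# Session estimate cited above: about 500 mL for 20 to 50 queries.
SESSION_WATER_ML = 500
per_query_low_ml = SESSION_WATER_ML / 50   # if the session had 50 queries
per_query_high_ml = SESSION_WATER_ML / 20  # if the session had 20 queries

# Upper-end daily consumption cited above for a large data center.
DAILY_GALLONS = 5_000_000
daily_liters = DAILY_GALLONS * LITERS_PER_US_GALLON

print(f"Water per query: {per_query_low_ml:.0f} to {per_query_high_ml:.0f} mL")
print(f"Large data center: about {daily_liters / 1e6:.1f} million liters per day")
```

On these assumptions, a single query accounts for roughly 10 to 25 milliliters of water, and a large facility at the upper end uses close to 19 million liters a day.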

“Just because this is called ‘cloud computing’ doesn’t mean the hardware lives in the cloud. Data centers are present in our physical world, and because of their water usage they have direct and indirect implications for biodiversity.”

Caption: This chart illustrates the projected increase in annual water consumption by data centers in the United States, a hidden environmental cost of the AI boom.

The E-Waste Challenge

The rapid pace of innovation in AI hardware gives expensive, resource-intensive components like GPUs a short service life. This contributes to the growing global problem of electronic waste. The manufacturing of this hardware also has a significant environmental impact, involving the extraction of raw materials and the use of toxic chemicals. The increasing demand for generative AI applications is spurring a surge in the production and shipment of high-performance computing hardware, further exacerbating these indirect environmental impacts.

The Path to Sustainable AI: A Multi-Pronged Approach

Addressing the environmental impact of AI requires a concerted effort from researchers, developers, and policymakers. The path forward involves a combination of technological innovation, strategic deployment, and a commitment to transparency.

Technological and Algorithmic Efficiency

One of the most promising avenues for reducing AI’s environmental impact is through the development of more efficient algorithms and hardware. Techniques like model pruning, quantization, and knowledge distillation can significantly reduce the size and computational requirements of AI models without a substantial loss in accuracy. On the hardware front, companies like AMD and Intel are focused on improving the energy efficiency of their processors and developing advanced cooling solutions.
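Of the techniques named above, quantization is the simplest to illustrate. The Python sketch below shows one common variant, symmetric 8-bit quantization of a handful of made-up weights, using only the standard library. It is a toy illustration under stated assumptions, not how any particular framework implements the idea; the function names and sample values are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -1.27, 0.05, 0.40, -0.63]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

# Storage if each value is kept as a 32-bit float versus an 8-bit integer.
float32_bytes = 4 * len(weights)
int8_bytes = 1 * len(weights)

print(quantized, f"max error {max_error:.4f}")
print(f"{float32_bytes} bytes as float32 vs {int8_bytes} bytes as int8")
```

Each weight shrinks from four bytes to one, at the cost of a rounding error bounded by half the scale factor. Less memory to move generally means less energy per inference, although the actual savings depend on the hardware and the model.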

The Role of Renewable Energy

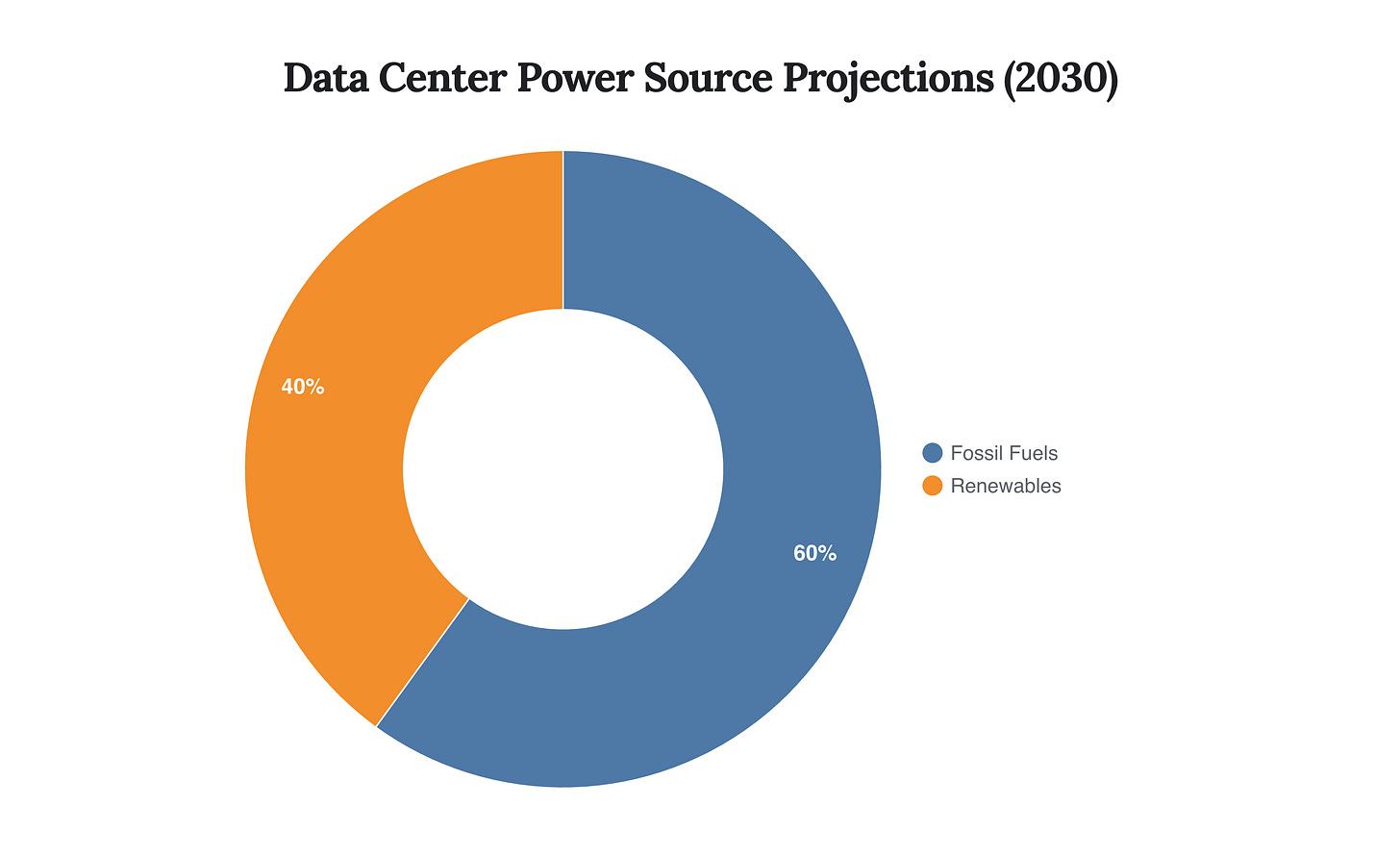

Transitioning data centers to renewable energy sources is a critical step in decarbonizing the AI industry. Many major tech companies are investing heavily in renewable energy to power their operations. Furthermore, strategically scheduling AI workloads to coincide with periods of high renewable energy availability can reduce emissions by 30-40% without any changes to the underlying technology.
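The scheduling idea can be sketched in a few lines of Python. The example below assumes an hourly forecast of grid carbon intensity is available and picks the start hour that minimizes the average intensity over a job of fixed length. The forecast values, the function name, and the three-hour job are all invented for illustration; real schedulers also have to account for deadlines, capacity, and forecast error.

```python
def best_start_hour(intensity_forecast, job_hours):
    """Return (start_index, average_intensity) of the cleanest contiguous window."""
    if job_hours <= 0 or job_hours > len(intensity_forecast):
        raise ValueError("job_hours must be between 1 and the forecast length")
    window_total = sum(intensity_forecast[:job_hours])
    best_total, best_start = window_total, 0
    # Slide the window one hour at a time, updating the running total.
    for start in range(1, len(intensity_forecast) - job_hours + 1):
        window_total += intensity_forecast[start + job_hours - 1]
        window_total -= intensity_forecast[start - 1]
        if window_total < best_total:
            best_total, best_start = window_total, start
    return best_start, best_total / job_hours


# Hypothetical forecast in gCO2e per kWh for the next 12 hours.
forecast = [450, 440, 420, 380, 330, 290, 280, 310, 370, 430, 460, 470]
JOB_HOURS = 3

start, avg_intensity = best_start_hour(forecast, JOB_HOURS)
run_now_avg = sum(forecast[:JOB_HOURS]) / JOB_HOURS
reduction = 1 - avg_intensity / run_now_avg

print(f"Start at hour {start}: {avg_intensity:.1f} g/kWh on average")
print(f"Reduction versus starting immediately: {reduction:.1%}")
```

With these made-up numbers, delaying the job by five hours cuts its emissions by about a third. That outcome follows entirely from the invented forecast and should not be read as evidence for the figure quoted above; it only shows the mechanism by which shifting workloads in time can lower emissions without changing the hardware or the model.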

“Global electricity demand from data centres is set to more than double over the next five years, consuming as much electricity by 2030 as the whole of Japan does today.” - Fatih Birol, IEA Executive Director

Caption: This chart projects the likely energy sources for the increased electricity demand from data centers by 2030, with a significant portion still reliant on fossil fuels.

The Double-Edged Sword: AI for Climate Solutions

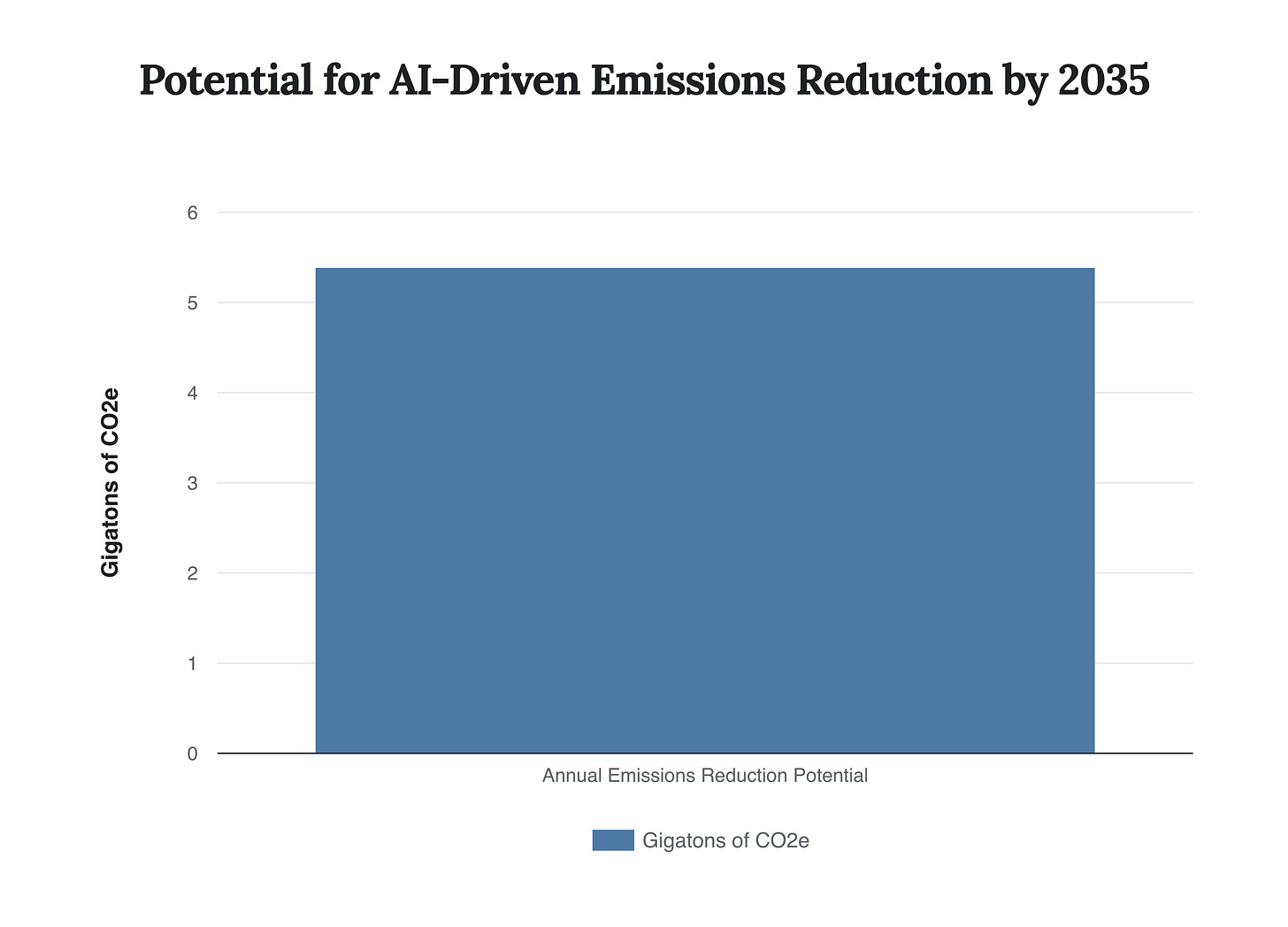

While AI is a significant contributor to energy consumption, it also holds immense potential as a tool for climate change mitigation. AI is being used to improve climate modeling and prediction, optimize energy grids for renewable energy integration, and enhance the efficiency of industrial processes. For example, AI algorithms can predict wind turbine electricity generation, reducing the reliance on fossil fuel backups. AI is also being leveraged for sustainable agriculture by optimizing planting and irrigation schedules. The key challenge is to ensure that the environmental benefits of these applications outweigh the carbon cost of the underlying AI infrastructure.

Caption: Research suggests that by 2035, AI could reduce global emissions by 5.4 GtCO₂e annually, potentially outweighing its own energy consumption.

Strategic Foresight and Concluding Insights

The trajectory of AI’s energy consumption presents a critical juncture for industry leaders, investors, and policymakers. The unchecked growth of AI’s computational demands is on a collision course with global climate targets. However, the narrative is not solely one of environmental peril. The same technological prowess that drives AI’s energy consumption can be harnessed to create a more sustainable future.

The coming years will be defined by a race to innovate and implement sustainable AI practices. Companies that successfully navigate this transition will not only mitigate their environmental impact but also gain a significant competitive advantage. The winners will be those who can optimize for both performance and efficiency, leveraging renewable energy and pioneering new, less resource-intensive AI architectures.

Investors should look for companies that are transparent about their energy consumption and are actively investing in sustainable AI solutions. Policymakers have a crucial role to play in incentivizing the development and adoption of green computing technologies and in ensuring that the growth of the AI industry is aligned with national and international climate commitments.

The dual nature of AI as both a climate challenge and a climate solution is the central paradox of our time. The ultimate impact of this transformative technology will depend on the choices we make today.

The future of AI is not just about building more powerful models; it’s about building them responsibly, with a clear-eyed understanding of their environmental cost and a steadfast commitment to a sustainable technological future.