The $67 Billion Feedback Loop: Why 80% of Modern Discovery Is Killing Innovation

The Decline of Serendipity: When Algorithms Kill Surprise

Opening Synthesis: The era of stumbling upon the unexpected is over. In its place, we have built a high-efficiency engine of confirmation bias that is actively eroding the foundations of creativity and commerce. In 2024, the strategic landscape of content consumption is defined by a single, overwhelming statistic: more than 80% of what viewers stream on the leading streaming platform is surfaced by a recommendation algorithm rather than found through active search. This shift was sold as a triumph of personalization, but recent data points to a darker reality: a “surpriseless” economy in which collective novelty is declining, cultural products are converging on the same formulas, and the cost of this “blind spot,” manifested in hallucinations, errors, and innovation decay, has reached an estimated $67.4 billion annually. We are no longer exploring; we are being fed. This briefing dissects the mechanical death of serendipity and forecasts the inevitable rise of a premium market for “inefficient” human discovery.

1. The Algorithmic Stranglehold: The Mechanics of the 80%

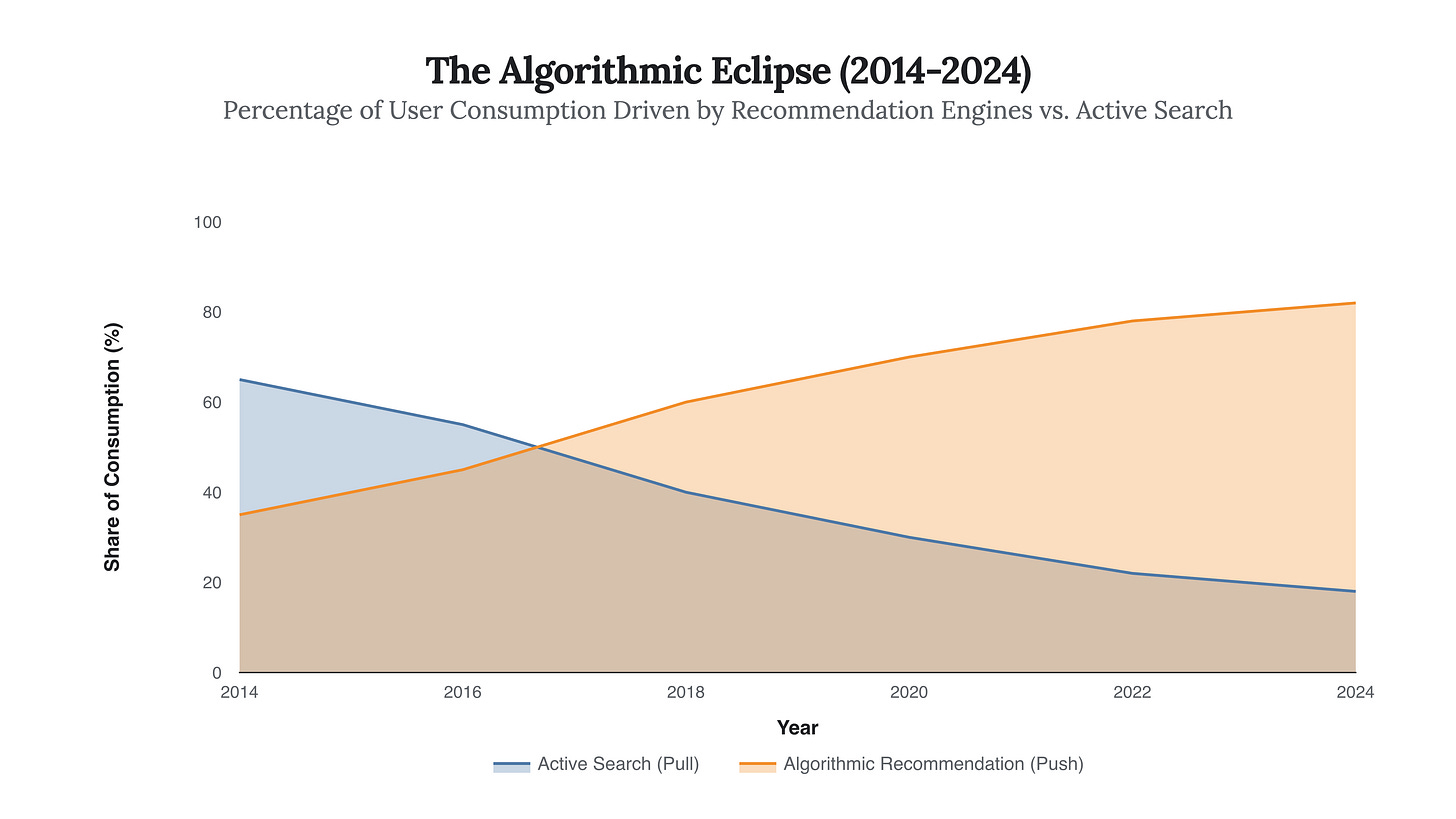

The transition from the “Search Era” (2000–2015) to the “Feed Era” (2016–present) has fundamentally altered the physics of information discovery. Discovery used to be an active “pull” mechanism: users queried databases and browsed aisles. Today it is a passive “push” mechanism. Netflix reports that over 80% of content streamed on its platform is discovered through its recommendation engine, leaving less than 20% to active search. Spotify follows the same pattern, with algorithmic playlists mediating over 62% of music discovery as of 2020, a share that has likely risen since the introduction of ‘Smart Shuffle’ and the AI DJ.

The 40-Minute Event Horizon

The speed at which these systems enclose users in a “relevance loop” has accelerated dramatically. Analysis of TikTok’s recommendation behavior points to an “interest latency” of just 40 minutes: in under an hour of engagement, a new user’s feed becomes effectively calcified around a narrow set of interests, filtering out roughly 90% of unrelated content. This is not just personalization; it is isolation.

Fig 1.1: The “Push” economy has nearly inverted the discovery model in a decade. As the red area (Algorithmic Push) consumes the chart, the mathematical probability of a user encountering “unrelated” or serendipitous content approaches zero.

2. The Homogenization Crisis: When Everything Sounds the Same

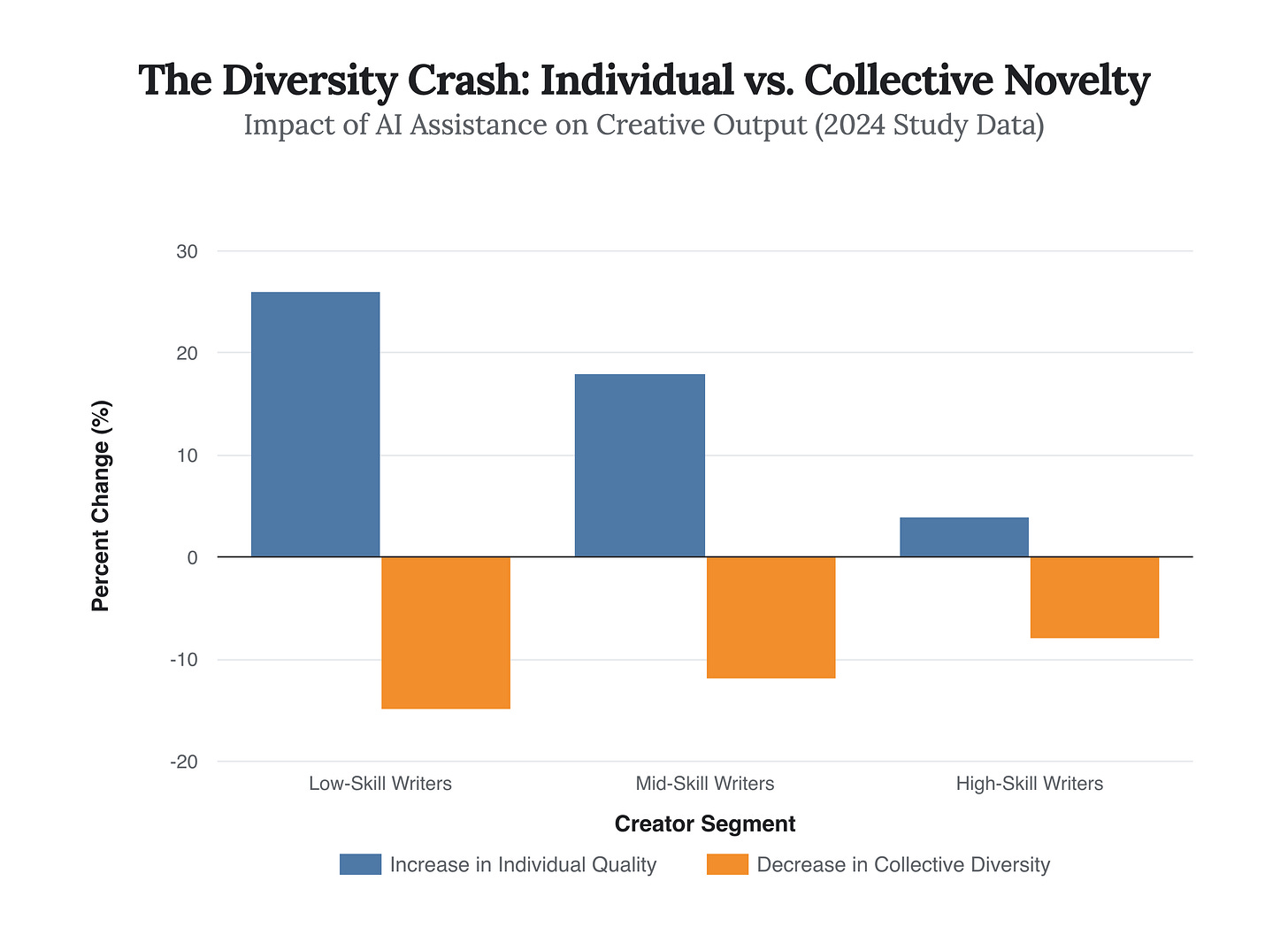

The second-order effect of this efficiency is a profound flattening of culture. Recommendation algorithms are optimizers: they maximize engagement by serving more of what has already worked. This creates a feedback loop that punishes risk and rewards conformity. A landmark July 2024 study published in Science Advances quantified a closely related “creativity paradox” in generative AI. Researchers found that access to AI tools increased individual creativity, with the largest gains among the least creative writers, but significantly reduced collective diversity. The stories produced became structurally and thematically similar, creating a “grey goo” of content.

The “Blockbuster” Effect

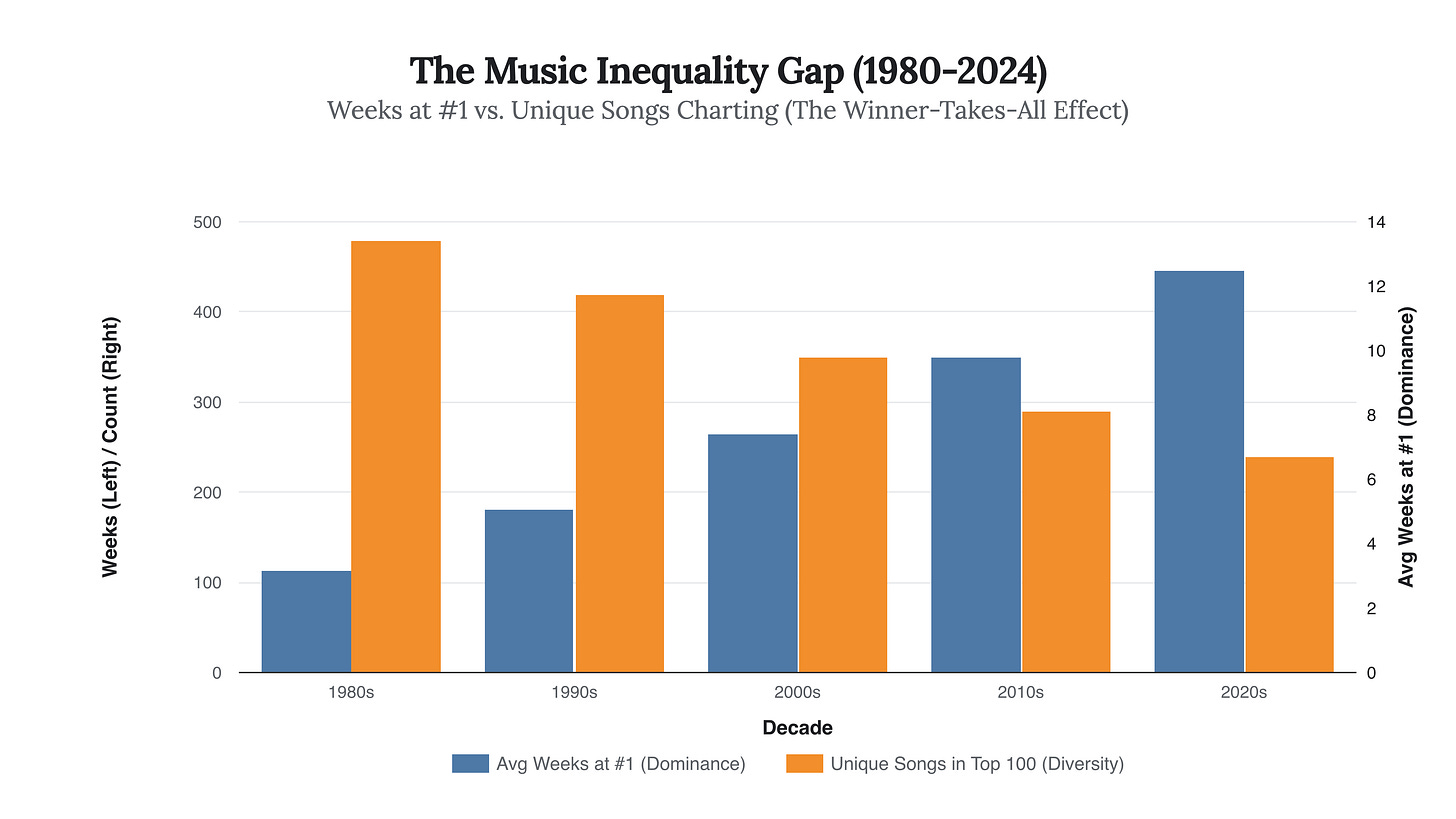

This homogenization is visible in hard market data. In the music industry, the “winner-take-all” dynamic has intensified. Historical analysis of the Billboard Hot 100 shows that while turnover among the bottom 90% of songs has increased (the “fast fashion” of music), the lifespan of the Number 1 hit has tripled since the 1960s. Recommendation algorithms reinforce the “head” of the power-law curve at the expense of the “tail,” effectively hollowing out the mid-market where innovation typically incubates.
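The feedback loop described above (serve what already worked, which then works more) can be illustrated with a toy simulation. The sketch below is a hypothetical model, not any platform's recommender: with `feedback=True`, each play is drawn in proportion to an item's past plays plus one pseudo-play (a Pólya-urn, rich-get-richer rule); with `feedback=False`, every item is equally likely, standing in for undirected browsing. The function names, catalog size, number of rounds, and seed are all illustrative assumptions. Two simple concentration measures are reported: the share of plays captured by the top 10% of items, and Shannon entropy normalized to the range 0 to 1.

```python
import math
import random


def simulate(n_items: int, n_rounds: int, feedback: bool, seed: int = 0) -> list[int]:
    """Hypothetical toy model: one item is played per round; returns play counts per item."""
    rng = random.Random(seed)
    plays = [0] * n_items
    items = range(n_items)
    for _ in range(n_rounds):
        if feedback:
            # Rich-get-richer: weight = past plays + 1 pseudo-play,
            # so never-played items keep a small chance of surfacing.
            weights = [p + 1 for p in plays]
            choice = rng.choices(items, weights=weights, k=1)[0]
        else:
            # Undirected browsing: every item equally likely.
            choice = rng.randrange(n_items)
        plays[choice] += 1
    return plays


def top_share(plays: list[int], fraction: float = 0.1) -> float:
    """Share of all plays captured by the most-played `fraction` of items (the "head")."""
    counts = sorted(plays, reverse=True)
    k = max(1, round(len(counts) * fraction))
    return sum(counts[:k]) / sum(counts)


def normalized_entropy(plays: list[int]) -> float:
    """Shannon entropy of the play distribution divided by its maximum, log(n_items)."""
    total = sum(plays)
    h = -sum((c / total) * math.log(c / total) for c in plays if c > 0)
    return h / math.log(len(plays))


if __name__ == "__main__":
    for label, fb in [("undirected browsing", False), ("popularity feedback", True)]:
        plays = simulate(n_items=100, n_rounds=5000, feedback=fb, seed=42)
        print(f"{label:>20}: top-10% share={top_share(plays):.2f}, "
              f"normalized entropy={normalized_entropy(plays):.2f}")
```

Under these assumptions the feedback run should concentrate plays in the head and lower entropy relative to the uniform baseline. The exact figures depend on the seed and parameters and are not estimates of any real platform's behavior.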

Fig 2.1: The “Creativity Trap.” While AI tools (and by extension, curatorial algorithms) raise the floor of quality (blue bars), they lower the ceiling of diversity (red line). We are trading brilliance for consistency.

3. The Economic Cost: The $67 Billion Fatigue

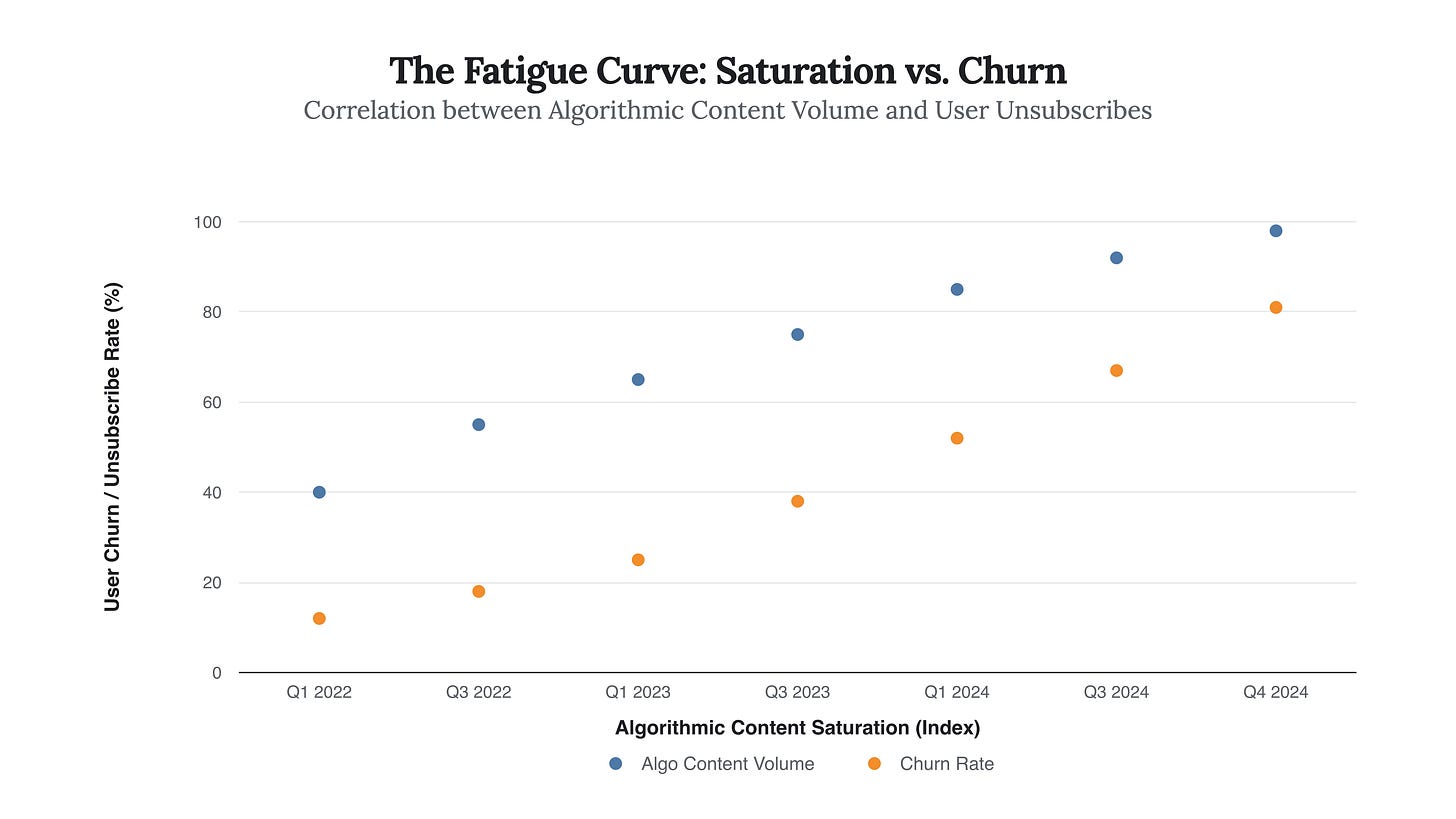

The strategic question is: does this matter for the bottom line? The answer is a resounding yes. The loss of serendipity is not just a philosophical problem; it is a retention crisis. “Marketing fatigue” is the new churn driver. Recent reports indicate that 81% of consumers have unsubscribed from brands because of excessive, repetitive, or irrelevant messaging, and that 67% of consumers had reached a breaking point of “fatigue” by late 2024.

Furthermore, the reliance on automated systems has introduced a massive “Reliability Tax.” Organizations are spending an estimated $67.4 billion globally to mitigate the effects of AI hallucinations, errors, and the poor decision-making that stems from algorithmic echo chambers. When discovery is automated, errors propagate at the speed of software.

Fig 3.1: The limit of efficiency. As platforms maximize algorithmic saturation (x-axis), they hit a wall of human fatigue (y-axis), leading to a sharp increase in churn. The correlation suggests we have passed the point of diminishing returns for purely algorithmic curation.

4. Strategic Foresight: The Rise of ‘Synthetic Serendipity’

We are entering a new phase of the digital economy in which “friction” and “inefficiency,” in the form of human curation and random discovery, will become luxury goods. The “so what?” for investors and strategists is clear: the platforms that can successfully reintroduce surprise will win the next decade of retention wars.

Key Predictions for 2025-2027:

The “Randomness” API: Major platforms will introduce adjustable “serendipity sliders” (e.g., Spotify’s “Smart Shuffle” evolving into “Surprise Me” modes) that deliberately inject low-relevance items to break out of local maxima.

Human Curation as a Luxury Service: Just as “hand-made” became a premium label in manufacturing, “human-curated” will become the premium tier in media. We expect a resurgence of newsletter bundlers, boutique tastemakers, and “slow media” aggregators who charge for the privilege of not using an algorithm.

The Innovation Divergence: Companies that rely solely on AI-driven market research will converge on identical product ideas (the “grey goo” effect). The biggest alpha will be found by firms using contrarian, non-data-driven signals to identify “long tail” opportunities that algorithms miss by design.
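The “serendipity slider” in the first prediction can be pictured as a simple epsilon-greedy-style mixing rule: each feed slot is filled from the relevance ranking most of the time, and from outside the ranking with a tunable probability. The sketch below is a minimal illustration under that assumption; `serendipity_mix` and its `slider` parameter are hypothetical names, not any platform's published API, and real systems would likely weight the random pool rather than sample it uniformly.

```python
import random
from typing import List, Optional, Sequence


def serendipity_mix(ranked: Sequence[str], catalog: Sequence[str],
                    slider: float, k: int, seed: Optional[int] = None) -> List[str]:
    """Hypothetical k-item feed: each slot comes from the relevance ranking with
    probability 1 - slider, or from a random catalog item outside the ranking
    with probability slider (0.0 = pure relevance, 1.0 = pure exploration)."""
    if not 0.0 <= slider <= 1.0:
        raise ValueError("slider must be in [0, 1]")
    rng = random.Random(seed)
    ranked_iter = iter(ranked)
    in_ranking = set(ranked)
    # Exploration pool: everything the ranker did NOT pick, in random order.
    pool = [item for item in catalog if item not in in_ranking]
    rng.shuffle(pool)

    feed: List[str] = []
    while len(feed) < k:
        if pool and rng.random() < slider:
            feed.append(pool.pop())  # serendipitous, low-relevance pick
            continue
        nxt = next(ranked_iter, None)
        if nxt is not None:
            feed.append(nxt)  # next most relevant pick, order preserved
        elif pool:
            feed.append(pool.pop())  # ranking exhausted: fall back to the pool
        else:
            break  # nothing left to show
    return feed


if __name__ == "__main__":
    ranked = [f"hit{i}" for i in range(20)]
    catalog = ranked + [f"tail{i}" for i in range(200)]
    print(serendipity_mix(ranked, catalog, slider=0.3, k=10, seed=7))
```

Setting `slider` to 0.0 reproduces the pure relevance ranking, while higher values trade short-term relevance for exposure to the long tail, which is the trade-off the prediction describes.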

Fig 4.1: The visual signature of cultural stagnation. As the “head” of the curve (blue bars) gets stronger due to algorithmic reinforcement, the total diversity of the ecosystem (red line) collapses.

Concluding Insight: The algorithm promised to show us what we wanted. In doing so, it hid everything we didn’t know we needed. The cost of this trade-off is no longer theoretical: it is a multi-billion-dollar drag on innovation and a poison pill for retention. The next unicorn will not be built on better prediction, but on the strategic reintroduction of surprise.

“We are building a world where everyone sees a different world, but those worlds are increasingly made of the same gray bricks. True innovation requires the inefficiency of stumbling in the dark.”
