The $38 Billion Breakup: How OpenAI’s AWS Alliance Shattered Microsoft’s AI Exclusivity

A deep dive into the end of the cloud monogamy era and the dawn of the multi-polar AI compute war

The End of an Era: Deconstructing the AI Cold War’s Biggest Realignment

The tectonic plates of the artificial intelligence landscape have just violently shifted. In a move that effectively ends the era of cloud exclusivity for frontier AI models, OpenAI has entered into a monumental $38 billion, multi-year strategic partnership with Amazon Web Services (AWS). Announced in early November 2025, this agreement dismantles the long-held assumption that OpenAI’s destiny was inextricably linked to Microsoft Azure.

For industry leaders, investors, and policymakers, this is not merely a vendor change; it is a fundamental realignment of the AI power structure, signaling the start of a multi-cloud, multi-polar compute war. This briefing deconstructs the strategic calculus behind this decision, maps the new competitive battlefield, and provides a forward-looking analysis of the second-order effects that will ripple through the ecosystem.

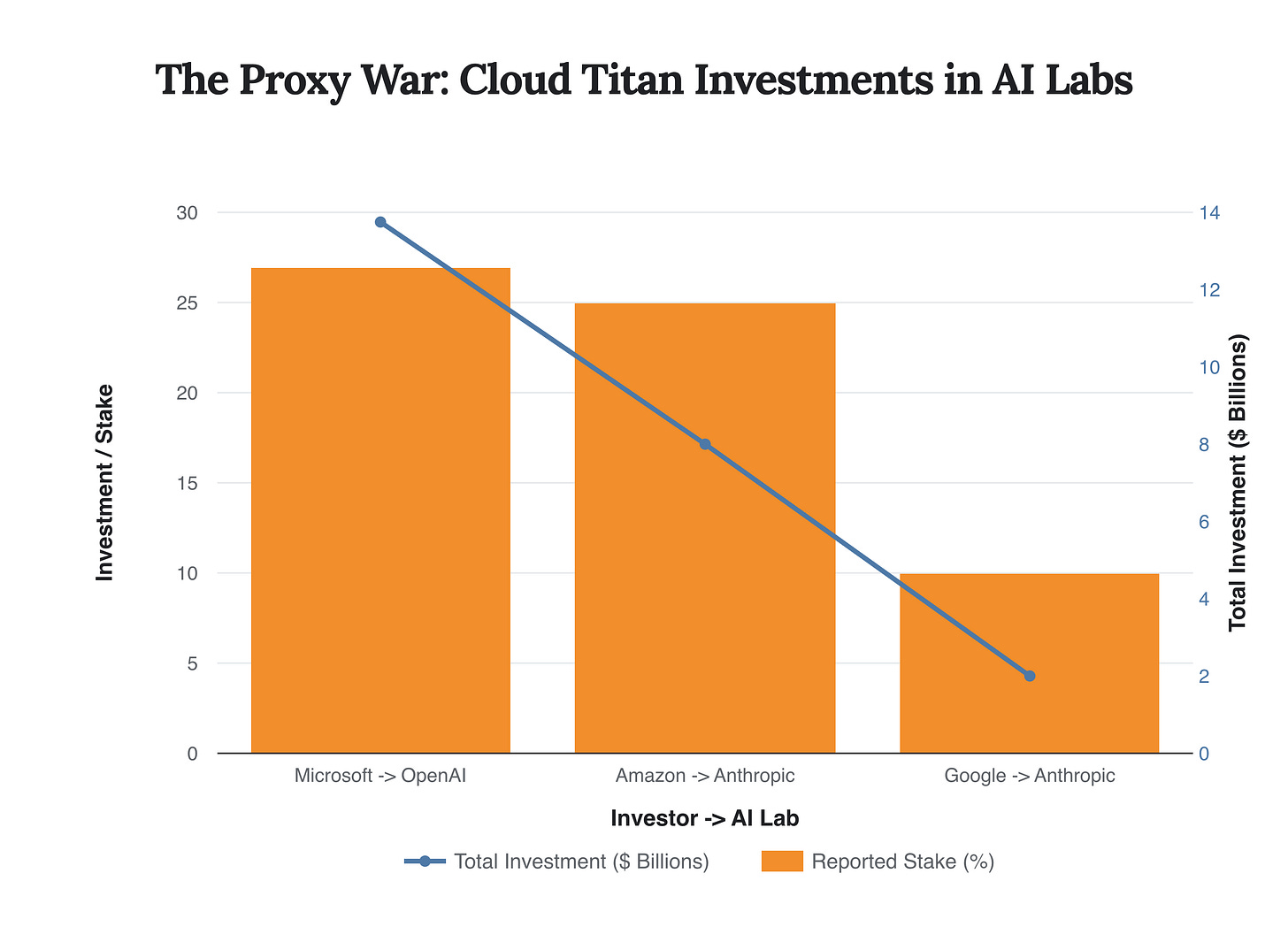

For years, the narrative was simple: Microsoft and OpenAI were the undisputed power couple of the AI revolution, cemented by over $13 billion in investment and a deeply integrated partnership that made Azure the de facto home of ChatGPT and GPT-4. Amazon's primary countermove was a multibillion-dollar investment in rival AI lab Anthropic, positioning its Claude models as the premier alternative on AWS. This created a bipolar world. That world is now history. The OpenAI-AWS alliance is a declaration of independence for OpenAI and a major strategic victory for Amazon. It instantly repositions AWS at the heart of the generative AI boom and challenges Microsoft's grip on the most valuable AI workloads on the planet.

The Anatomy of a Megadeal

The scale of the agreement is staggering, reflecting the insatiable demand for computing power required to train and run next-generation AI. Under the seven-year deal, OpenAI will gain immediate access to AWS’s world-class infrastructure, specifically clusters of hundreds of thousands of NVIDIA’s most advanced GPUs, including the GB200 and GB300 series, linked via Amazon’s high-performance EC2 UltraServers. This raw power is essential not just for running current services like ChatGPT but for training the frontier models of tomorrow.
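To put the headline figure in per-year terms, here is a back-of-envelope average. It rests on our own simplifying assumption, not a disclosed term, that the $38 billion is spread evenly across the seven years:

```latex
\text{average annual commitment} \approx \frac{\$38\ \text{billion}}{7\ \text{years}} \approx \$5.4\ \text{billion per year}
```

Actual spending may be front- or back-loaded as capacity comes online. Treat this as an order-of-magnitude figure rather than an annual payment.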

The strategic implications are profound. For OpenAI, this is a calculated move to de-risk its most critical supply chain: compute. Reports of capacity constraints on Azure likely accelerated the need for diversification. By making AWS a second major pillar alongside Azure in a multi-cloud strategy, OpenAI gains resilience, negotiating leverage, and access to a broader ecosystem of innovation. For AWS, it is a coup. AWS had faced questions about its position in the generative AI race, and this deal firmly re-establishes its infrastructure dominance while capturing a significant share of the most important workload of the next decade. Investors noticed: Amazon's stock price jumped nearly 5% following the announcement.

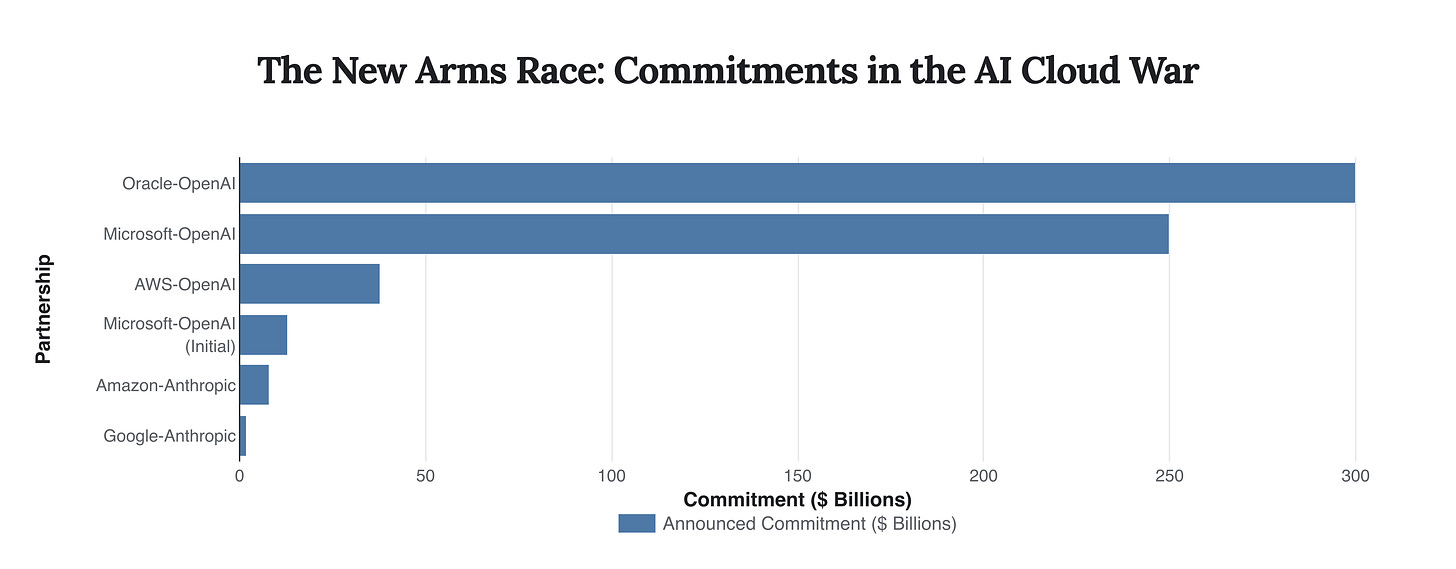

This chart visualizes the capital commitments defining the AI infrastructure landscape. Historical investments were significant, but the new wave of multibillion-dollar deals signals a dramatic escalation in the competition for compute resources. These include OpenAI's large new commitments to Microsoft and Oracle as well as AWS.

Mapping the New Battlefield: A Multi-Polar Cloud War

The OpenAI-AWS deal was not born in a vacuum. It is the culmination of escalating tensions and strategic maneuvering among the cloud hyperscalers. The era of simple, monogamous partnerships is over, replaced by a complex web of alliances, investments, and rivalries that define the new competitive landscape.

From Bipolar to Multi-polar

The AI infrastructure world was previously characterized by a clear divide. Microsoft had OpenAI; AWS had Anthropic. Google, a formidable AI player with its DeepMind division and Gemini models, was often seen as a distinct third pole. This structure is now fracturing. OpenAI is aggressively pursuing a multi-cloud strategy, adding AWS to its existing agreements with Google Cloud and Oracle. This shift forces enterprises to re-evaluate their own cloud strategies. The question is no longer which AI camp to join, but how to build a multi-cloud strategy that leverages the best models, regardless of where they are hosted.

“Scaling frontier AI requires massive, reliable compute. Our partnership with AWS strengthens the broad compute ecosystem that will power this next era and bring advanced AI to everyone.”

- Sam Altman, CEO, OpenAI

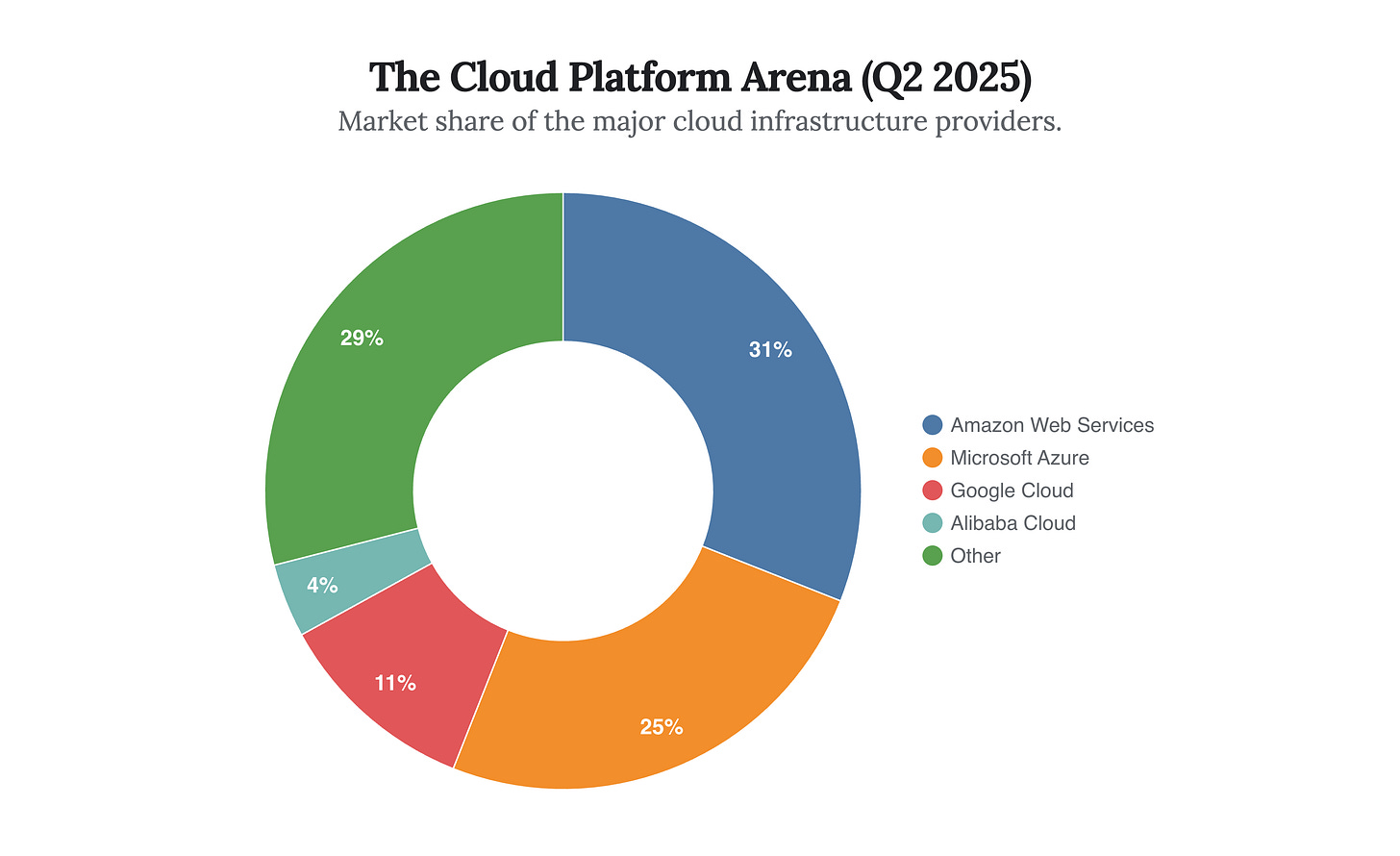

AWS remains the dominant force in the overall cloud market, providing a powerful foundation for its AI ambitions. The OpenAI deal is a strategic move to defend this lead by capturing the next wave of high-growth AI workloads, preventing them from migrating exclusively to challenger platforms like Microsoft Azure.

The War for the AI Platform Layer

While infrastructure is the foundation, the battle for dominance is increasingly moving up the stack to the AI platform layer. This layer consists of services such as Amazon Bedrock and Azure OpenAI Service, which give enterprises access to a variety of foundation models. Before the megadeal, AWS's strategy for Bedrock was to be a neutral marketplace, offering models from Anthropic, Meta, and others, with the notable exception of OpenAI's flagship proprietary models. The first crack appeared in August 2025, when OpenAI released gpt-oss, its first open-weight models since GPT-2. They shipped under the permissive Apache 2.0 license, which placed them outside Microsoft's exclusivity, and they were immediately made available on AWS. This was a clear precursor to the larger partnership, allowing AWS to offer some flavor of OpenAI technology to its millions of customers for the first time.

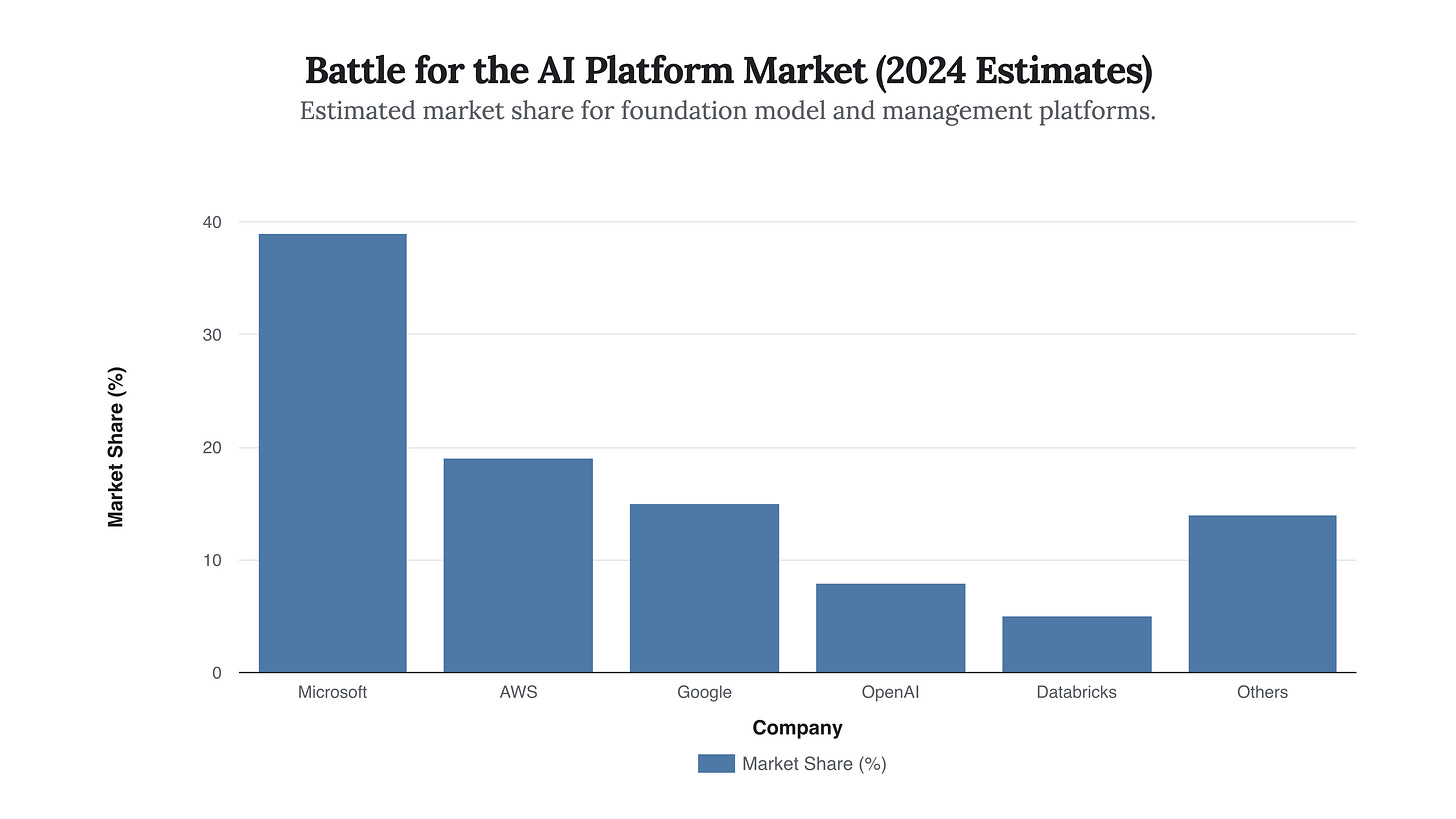

Microsoft established an early lead in the AI platform market, largely by leveraging its exclusive access to OpenAI's models. The new AWS-OpenAI deal is a direct challenge to that lead. It is, however, an infrastructure agreement: OpenAI's API remains exclusive to Azure. For now, AWS's platform offering of OpenAI technology rests on the open-weight models.

Strategic Foresight: Second-Order Effects and Future Scenarios

The immediate impact of the deal is clear, but its second- and third-order effects will be even more transformative. Leaders must look beyond the headlines to understand how this shift will reshape enterprise AI adoption, the competitive dynamics between AI labs, and the very economics of cloud computing.

The Enterprise: The End of Vendor Lock-In?

For Chief Technology Officers and enterprise leaders, the deal is a liberating event. The fear of being locked into a single cloud ecosystem to access state-of-the-art AI is diminishing. This will accelerate a “best-of-breed” approach, where companies can run different models from different providers on the cloud infrastructure that best suits their needs for security, cost, and performance. We predict a surge in demand for multi-cloud management tools and a greater emphasis on model interoperability. The key challenge will shift from accessing models to orchestrating them effectively and managing the associated costs and complexities across different platforms.

“This agreement demonstrates why AWS is uniquely positioned to support OpenAI’s demanding AI workloads.”

- Matt Garman, CEO, AWS

The timeline reveals a rapid escalation of investment and strategic partnerships. What began as a singular, exclusive alliance between Microsoft and OpenAI has evolved into a full-blown arms race, with the AWS deal marking the most significant inflection point to date.

The AI Labs: A Race for Differentiation

OpenAI's open-weight models are already on Amazon Bedrock, and its flagship workloads will now run on AWS infrastructure. As a result, the competitive pressure on other AI labs, particularly Anthropic, intensifies dramatically. Anthropic can no longer count on Claude being the marquee large language model on the world's largest cloud provider. It will now have to compete head-to-head with OpenAI on its home turf. This will force Anthropic and others to accelerate their own research and differentiate on factors beyond raw performance, such as safety, customizability, efficiency, and cost. Amazon's $8 billion investment in Anthropic is now more critical than ever. Its role is no longer that of a kingmaker but of a vital resource helping its champion compete in a newly crowded arena.

The major cloud providers have been fighting a proxy war through massive investments in leading AI startups. Microsoft's deep financial integration with OpenAI is mirrored by Amazon's heavy backing of Anthropic, setting the stage for intense direct competition on both cloud platforms.

The Hyperscalers: The New Economics of AI Compute

This deal fundamentally changes the economics of the cloud. AI workloads are extraordinarily resource-intensive, driving enormous demand for GPUs and specialized infrastructure. By securing a $38 billion commitment, AWS not only locks in a large revenue stream but also gains the predictable demand needed to justify its own colossal capital expenditures on next-generation data centers. However, the deal also introduces new pricing pressures. As the same leading models run across multiple clouds, providers will be forced to compete more aggressively on the price and performance of the underlying infrastructure. We expect to see a new wave of innovation in custom silicon (such as AWS's Trainium and Inferentia chips) and in pricing models designed specifically to attract and retain these hyperscale AI workloads.

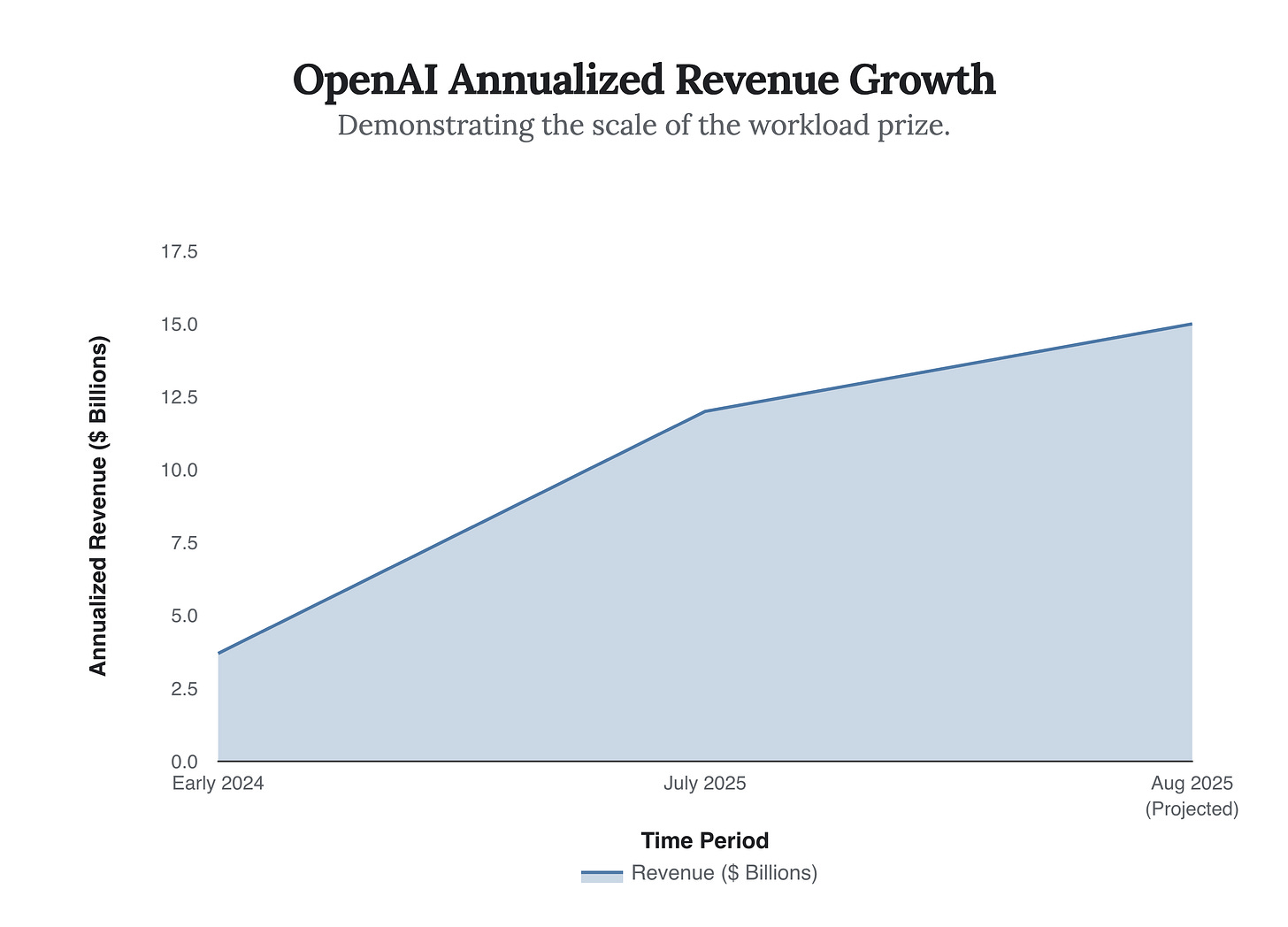

OpenAI’s explosive revenue growth highlights the immense commercial value of its models. Capturing even a portion of the infrastructure workload supporting this growth represents a multi-billion dollar opportunity for any cloud provider, justifying the scale of the AWS deal.

The Final Analysis: No Moats are Permanent

The OpenAI-AWS partnership is the most significant event in the cloud and AI industries this year. It formally ends the period of strategic monogamy and ushers in an era of fierce, multi-front competition for AI workloads. For Microsoft, it is a harsh reminder that even a $13 billion head start and a 27% equity stake do not guarantee perpetual loyalty; in the world of technology, moats are rarely permanent. For AWS, it is a masterstroke that leverages its core strength—scalable, reliable infrastructure—to reclaim its position at the center of the industry’s most important trend.

For the rest of the ecosystem, the message is clear: the AI landscape is becoming more open, more competitive, and more complex. The proliferation of leading models across multiple platforms will democratize access to cutting-edge AI, but it will also place a premium on strategic decision-making, technical orchestration, and cost management. The winners will be those who can navigate this new multi-polar world to build truly differentiated applications, unconstrained by allegiance to a single platform.

The single most important strategic insight is that compute infrastructure has definitively replaced model exclusivity as the primary competitive vector in the AI arms race.