Anatomy of a Digital Fracture: Deconstructing the October 2025 Microsoft Azure Global Outage

A Strategic Intelligence Briefing on the Cascading Failures, Economic Fallout, and the Intensifying Debate on Cloud Concentration

The Week the Cloud Cracked: A Systemic Shock

On October 29, 2025, the digital scaffolding supporting a significant portion of the global economy buckled. A major global outage of Microsoft Azure, one of the world’s three dominant cloud computing platforms, triggered a cascade of disruptions that rippled across industries, from airlines and banking to government services and retail. The incident, lasting roughly eight hours, was not a malicious attack but a self-inflicted wound: an “inadvertent tenant configuration change” within Azure Front Door (AFD), a critical content delivery network service.

This seemingly minor error exposed profound vulnerabilities at the heart of our increasingly centralized digital infrastructure. Coming just days after a similar large-scale disruption at Amazon Web Services (AWS), the Azure outage has intensified a critical debate among policymakers and industry leaders about the systemic risks of cloud concentration and the urgent need for a new paradigm of digital resilience. This briefing deconstructs the anatomy of the failure, quantifies its immediate economic impact, and provides a strategic analysis of the long-term consequences for businesses and the cloud market itself.

Anatomy of the Failure: How One Change Toppled a Global Network

The October 29th outage originated from a flawed configuration change pushed to Azure Front Door, a service designed to accelerate the delivery of applications and content globally. This misconfiguration created an invalid state that caused a significant number of AFD nodes—the distributed servers that manage traffic—to fail. Compounding the initial error, Microsoft reported that its safety validation mechanisms, designed to block such erroneous deployments, failed due to a software defect, allowing the faulty configuration to propagate across the network.
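To make the role of such safeguards concrete, the following is a minimal sketch of a staged rollout with a health gate, a common pattern for limiting the reach of a bad change. It is not a description of Microsoft's deployment pipeline: the stage names, the `fleet` dictionary, and every function name are hypothetical and exist only for illustration.

```python
def staged_rollout(stages, apply_config, is_healthy, rollback):
    """Apply a change stage by stage; undo every applied stage on the first unhealthy one."""
    applied = []
    for stage in stages:
        apply_config(stage)
        applied.append(stage)
        if not is_healthy(stage):
            # Stop the rollout and revert in reverse order of application.
            for done in reversed(applied):
                rollback(done)
            return False, stage
    return True, None


# Hypothetical in-memory fleet: each stage currently runs configuration "v1".
fleet = {"canary": "v1", "region-a": "v1", "global": "v1"}
BAD_VERSION = "v2-invalid"  # stands in for a flawed configuration


def apply_config(stage):
    fleet[stage] = BAD_VERSION


def is_healthy(stage):
    # Toy health check: a stage is unhealthy once it runs the flawed version.
    return fleet[stage] != BAD_VERSION


def rollback(stage):
    fleet[stage] = "v1"


ok, failed_stage = staged_rollout(list(fleet), apply_config, is_healthy, rollback)
```

In this toy run the flawed change is caught at the first stage and never reaches the rest of the fleet. The sketch also shows the limit of the pattern: the gate only helps if the health check itself works, which is precisely the layer Microsoft reported as defective.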

The Domino Effect: From AFD to Global Shutdown

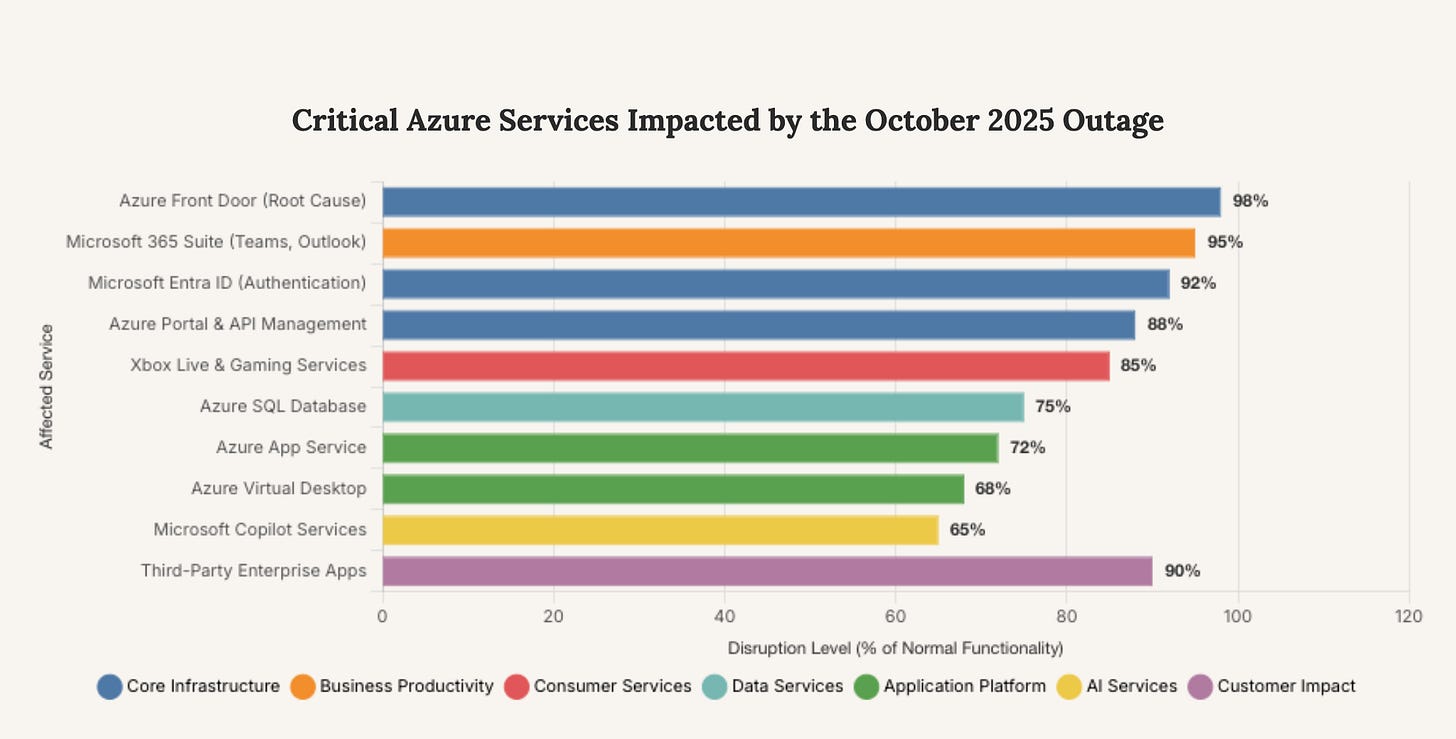

The initial failure within Azure Front Door triggered a cascading effect. As nodes failed, traffic shifted to the remaining healthy nodes and overloaded them, creating imbalances that amplified the disruption. As a result, even regions not directly hit by the bad configuration experienced service degradation. To users, the problem surfaced primarily as a Domain Name System (DNS) failure: the system that translates human-readable web addresses into machine-readable IP addresses was effectively broken, making services inaccessible. The disruption was global, impacting users and businesses across the United States, Europe, and Asia. The list of affected services was extensive, spanning critical infrastructure and consumer platforms, including Microsoft 365, Outlook, Teams, Xbox Live, Microsoft Entra ID (formerly Azure Active Directory), and Azure SQL Database, among dozens of others.

This chart illustrates the severity of the impact across key Azure services, with core infrastructure like authentication and content delivery networks experiencing the most significant disruption, leading to widespread downstream failures in business and consumer applications.
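The overload dynamic described above can be illustrated with a toy model. The sketch below assumes a fixed total load split evenly across healthy nodes, with any node failing once its share exceeds its capacity. The capacities and load figures are invented for illustration and do not reflect Azure Front Door's actual topology or load-balancing behavior.

```python
def simulate_cascade(capacities, total_load, initially_failed):
    """Return the count of healthy nodes after the initial failure and each later round."""
    healthy = {i for i in range(len(capacities)) if i not in initially_failed}
    rounds = [len(healthy)]
    while healthy:
        # Assumption: load is spread evenly over whatever nodes remain.
        share = total_load / len(healthy)
        overloaded = {i for i in healthy if capacities[i] < share}
        if not overloaded:
            break  # survivors can absorb the load, so the cascade stops
        healthy -= overloaded
        rounds.append(len(healthy))
    return rounds


# Hypothetical fleet of ten nodes with uneven capacity, serving 100 units of load.
CAPACITIES = [12, 13, 14, 15, 16, 18, 20, 25, 30, 40]
TOTAL_LOAD = 100

absorbed = simulate_cascade(CAPACITIES, TOTAL_LOAD, initially_failed={0})
collapsed = simulate_cascade(CAPACITIES, TOTAL_LOAD, initially_failed={8, 9})
```

Under these assumptions, losing the smallest node is absorbed by the other nine, while losing the two largest nodes pushes each round's survivors past their limits until none remain. The point is qualitative: the nodes that fail later were never touched by the original fault.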

The Economic Fallout and Ripple Effects

The immediate economic consequences of the outage were significant. Companies ranging from Alaska Airlines and Heathrow Airport to Starbucks and Vodafone reported major disruptions. Alaska Airlines experienced a system-wide disruption to its website and check-in services. In a striking example of real-world impact, a planned vote in the Scottish Parliament was suspended due to the technical issues. These events highlight the deep integration of cloud services into the machinery of both commerce and governance.

“The Microsoft Azure outage is yet another reminder of the weakness of centralised systems, just like last week when AWS went down and caused havoc. The trouble with big centralised systems... is that they suffer global outages because they have single points of failure.”

- Matthew Hodgson, CEO of Element

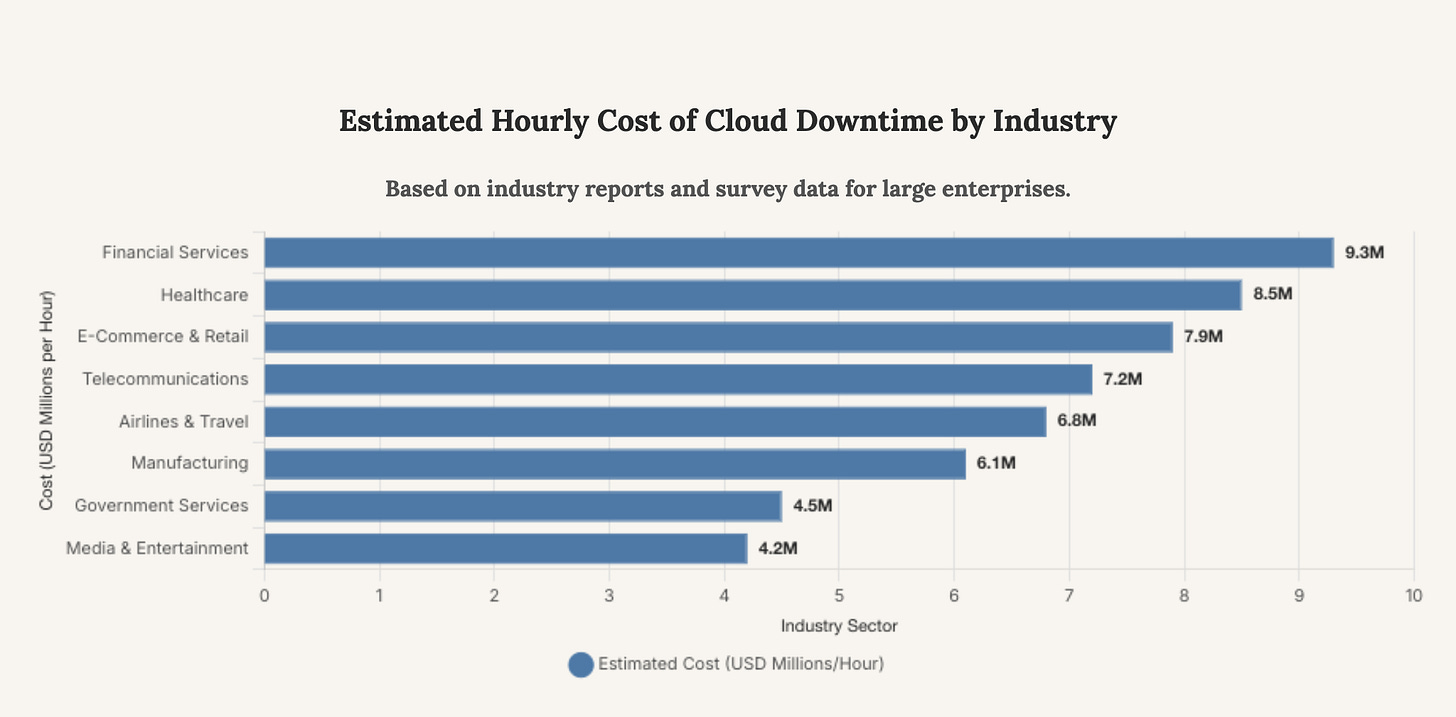

Estimating the total financial cost of such an outage is complex, but industry data provides a stark picture. Research indicates that for a majority of large companies, downtime costs can range from $300,000 to over $1 million per hour. A 2025 survey noted that 31% of corporate decision-makers considered just eight hours of cloud downtime to be “catastrophic.” Given the global scale and the number of major enterprises affected, the collective losses from the Azure outage likely ran into the hundreds of millions, if not billions, of dollars in lost revenue, productivity, and recovery costs.

This chart provides a conservative estimate of the direct financial impact per hour of a major cloud outage on various sectors, underscoring the critical dependence of high-transaction industries on cloud availability.
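The per-hour range cited above can be turned into a rough order-of-magnitude calculation. The sketch below uses only the $300,000 to $1 million hourly range and the roughly eight-hour duration from this briefing. The number of affected large companies is not known, so the counts passed in are purely hypothetical, and the upper figure is a floor for that band rather than a true maximum.

```python
LOW_COST_PER_HOUR = 300_000     # lower bound of the cited hourly downtime cost (USD)
HIGH_COST_PER_HOUR = 1_000_000  # cited as "over $1 million", so this understates the top end
OUTAGE_HOURS = 8                # approximate duration of the outage


def outage_cost_range(num_companies, hours=OUTAGE_HOURS):
    """Return (low, high) aggregate cost in USD for a given number of affected companies."""
    low = num_companies * hours * LOW_COST_PER_HOUR
    high = num_companies * hours * HIGH_COST_PER_HOUR
    return low, high


per_company = outage_cost_range(1)       # one large company
hundred_firms = outage_cost_range(100)   # hypothetical count, for scale only
thousand_firms = outage_cost_range(1000) # hypothetical count, for scale only
```

On these figures a single large company loses between $2.4 million and $8 million over eight hours. A hypothetical 100 such companies would land in the hundreds of millions, and 1,000 would reach the billions, which is the range the estimate above points to.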

The Human Element: A Recurring Point of Failure

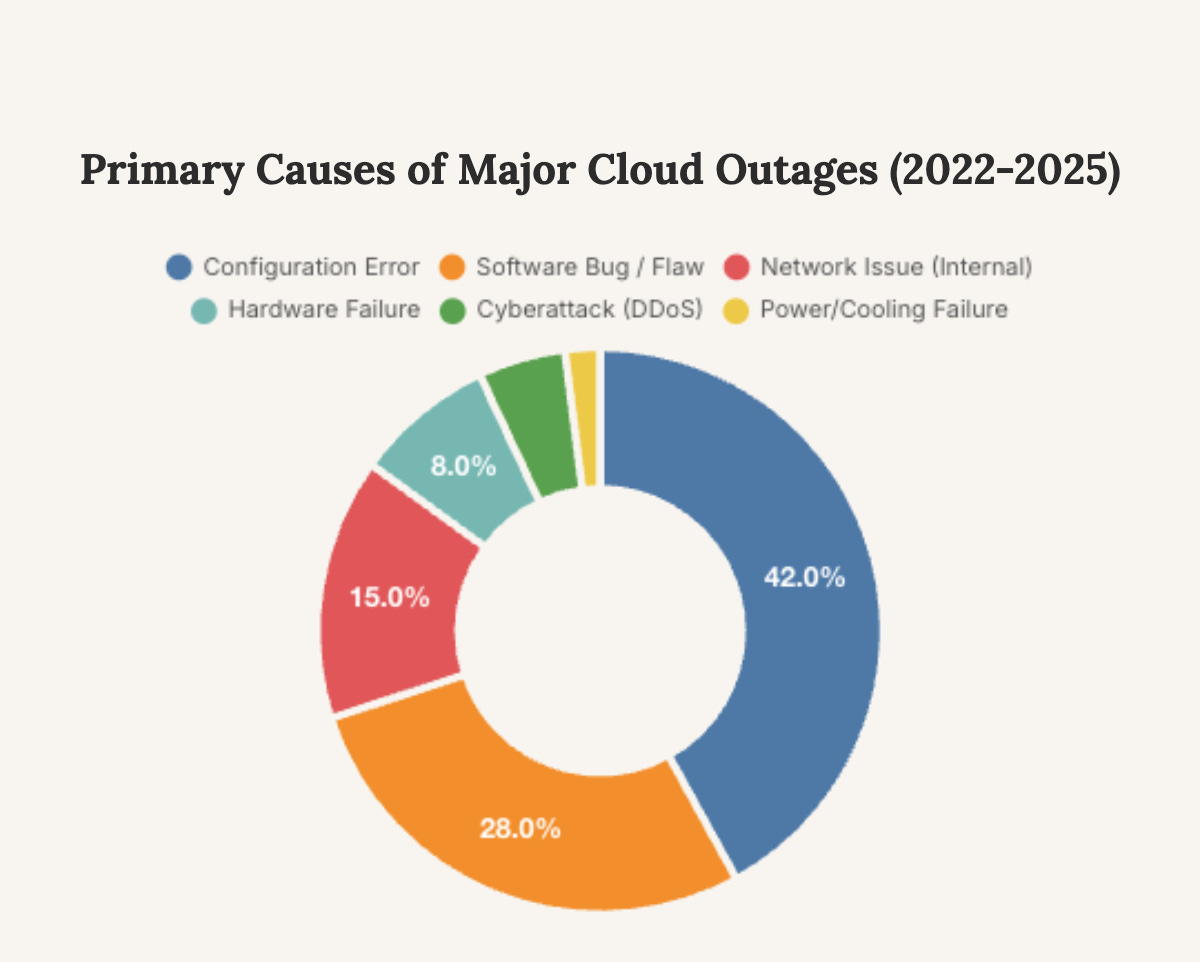

The root cause—a configuration error—underscores a persistent vulnerability in complex cloud systems: human error. While automation and safeguards are designed to prevent such incidents, this outage demonstrates that protection mechanisms can fail. A historical analysis of major cloud outages reveals that misconfigurations and flawed updates are a recurring theme. This pattern suggests that as cloud architectures grow in complexity, the potential for a single mistake to have global consequences increases, a risk that requires more than just technological solutions.

This chart breaks down the root causes of significant cloud service disruptions over the past three years. Human-driven configuration errors remain the single largest cause, highlighting a critical area for strategic improvement in operational protocols.

The Great Consolidation: Systemic Risk in a Three-Player Market

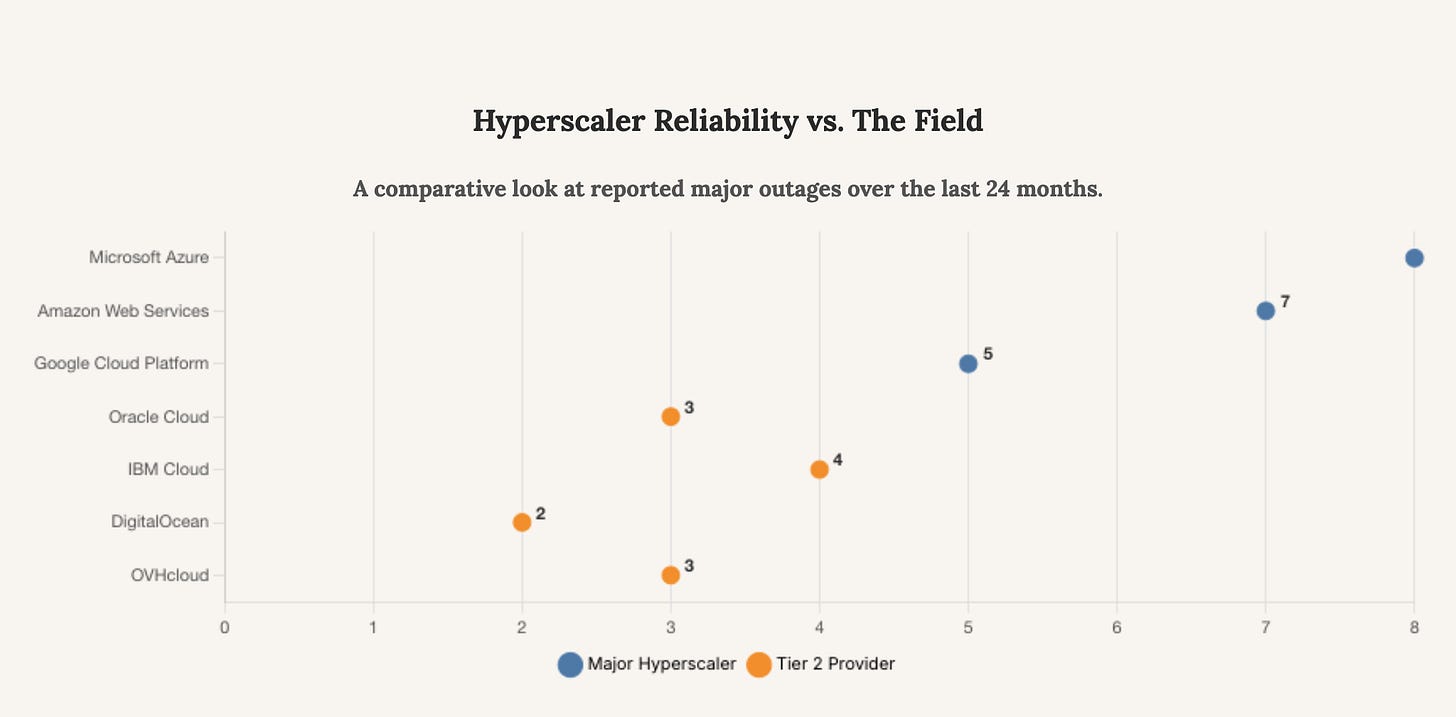

The back-to-back failures of AWS and Azure have thrown a harsh spotlight on the concentration of the cloud market. Together, AWS, Microsoft Azure, and Google Cloud control approximately 65% of the global cloud infrastructure market. This oligopoly creates a systemic risk where the failure of one provider can have a domino effect across the entire global economy. The incident has amplified calls from European cloud leaders and regulatory bodies for greater competition and digital sovereignty.

“This is very similar to the AWS outage of last week... Currently Amazon, Microsoft and Google have an effective triopoly on cloud services and storage, meaning that an outage of even part of their infrastructure can cripple hundreds, if not thousands, of applications and systems.”

- Dr. Saqib Kakvi, Royal Holloway, University of London

This market structure presents a strategic dilemma for enterprises. While the hyperscalers offer unparalleled scale and a rich ecosystem of services, vendor lock-in and the high cost of switching make diversification difficult. The outages serve as a critical reminder that a single-cloud strategy, regardless of the provider’s reputation, introduces a single point of failure that can be catastrophic.

The market is heavily consolidated, with AWS and Azure alone accounting for over half of the global cloud infrastructure market. This concentration is the source of the systemic risk exposed by recent outages.

This dot plot compares the number of significant service disruptions among major cloud providers. While the top three have more incidents in absolute terms due to their scale, the data highlights that no provider is immune to failure, making resilience strategies essential.

Strategic Foresight: Navigating the Post-Outage Landscape

The October 2025 Azure outage is an inflection point. For enterprises, it necessitates a fundamental reassessment of cloud strategy. For Microsoft, it is a significant blow to its reputation for reliability, a key battleground in its competition with AWS and Google Cloud.

Imperatives for Enterprise Leaders

Embrace Multi-Cloud and Hybrid Architectures: The single-vendor dependency model is broken. CIOs must now prioritize architectural resilience through multi-cloud or hybrid-cloud strategies, designing critical applications to fail over across different providers or between public and private clouds.

Scrutinize Vendor Safeguards: Leaders must demand greater transparency from cloud providers regarding their deployment processes, rollback capabilities, and the safeguards in place to prevent configuration errors. Service Level Agreements (SLAs) should be re-evaluated to account for the real-world costs of such widespread disruptions.

Invest in Observability, Not Just Monitoring: Simple up/down monitoring is insufficient. Businesses need deep observability platforms that can quickly diagnose the root cause of performance degradation, whether the fault lies within their own code or with an upstream cloud provider.
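The first imperative, failing over across providers, can be sketched at the application level as follows. This is a simplified illustration rather than a production pattern: the provider names and the two stand-in fetch functions are hypothetical, and real deployments would also need health checks, timeouts, data replication, and DNS or traffic-manager changes that are outside the scope of this example.

```python
from typing import Callable, Sequence, Tuple


class AllProvidersFailed(Exception):
    """Raised when every configured provider has failed."""


def fetch_with_failover(
    providers: Sequence[Tuple[str, Callable[[], str]]]
) -> Tuple[str, str]:
    """Try each provider in priority order and return (provider_name, response)."""
    errors = []
    for name, fetch in providers:
        try:
            return name, fetch()
        except Exception as exc:  # broad on purpose: any failure triggers failover
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical stand-ins for calls to two independent cloud providers.
def primary_cloud() -> str:
    raise ConnectionError("edge network unreachable")


def secondary_cloud() -> str:
    return "200 OK"


served_by, response = fetch_with_failover(
    [("primary", primary_cloud), ("secondary", secondary_cloud)]
)
```

Here the request is served by the secondary provider once the primary raises an error. The collected error messages also give a minimal observability signal, since they record which upstream failed and why.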

Signposts for the Future

Looking ahead, we anticipate several key developments. First, expect increased regulatory scrutiny of the cloud market, particularly in Europe, with a focus on competition and systemic risk. Second, the market for multi-cloud management and resilience tools will accelerate significantly. Finally, we predict that cloud providers will invest heavily in AI-driven operational management to predict and prevent configuration errors before they can cause cascading failures. The era of assuming hyperscale reliability is over; the era of architecting for resilience has begun.

The events of October 29th were not just a technical failure; they were a failure of imagination for the thousands of businesses that outsourced their digital foundations without a robust contingency plan. The cloud remains the engine of modern enterprise, but its vulnerabilities are now undeniably clear. The strategic challenge is no longer about moving to the cloud, but about surviving its inevitable fractures.

The ultimate lesson of the October 2025 Azure outage is that in a centralized cloud ecosystem, resilience is no longer an architectural choice but the most critical competitive differentiator.